Navigating diversity, fairness, accountability, and transparency in AI development.

Artificial intelligence (AI) is becoming increasingly ubiquitous in our lives, from voice assistants on our smartphones to self-driving cars on our roads. While the benefits of AI are numerous, its development and deployment raise significant ethical issues. One area of particular concern is the programming of AI "people of color" by White developers. A lack of diversity in AI development teams and in the datasets used to train AI models can perpetuate and amplify existing systemic biases, leading to discrimination against marginalized communities. This piece touches on the ethical considerations of AI "people of color" being programmed by White developers across four areas (diversity, fairness, accountability, and transparency) as a prompt for further consideration and discussion.

Diversity

One of the most significant ethical considerations of AI "people of color" being programmed by White developers is the lack of diversity in development teams. The tech industry is notorious for this problem: a 2019 AI Now Institute report, "Discriminating Systems: Gender, Race, and Power in AI," noted that Black workers made up only 2.5% of Google's workforce and 4% of Facebook's and Microsoft's. This lack of diversity makes it harder to build AI systems that serve all communities equitably.

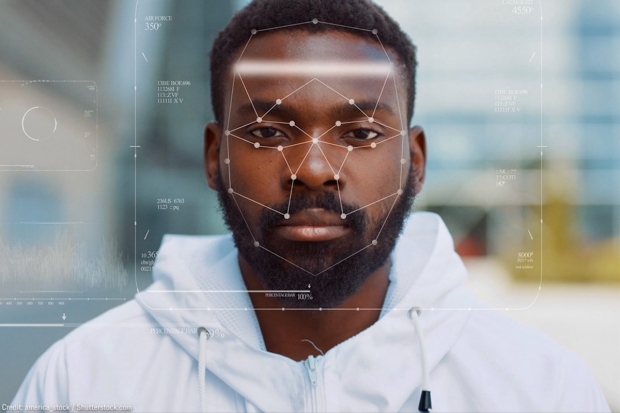

For example, facial recognition algorithms have been shown to be less accurate at identifying people with darker skin tones. A major contributing factor is that the datasets used to train and evaluate these algorithms have been dominated by images of lighter-skinned individuals. Such errors have led to misidentifications of people of color by law enforcement agencies. In 2020, for instance, Robert Williams, a Black man in Detroit, was wrongfully arrested after a facial recognition search matched him to surveillance footage. Cases like this have fueled calls to ban law enforcement use of the technology. Beyond wrongful arrests, misidentification can expose innocent people to dangerous encounters with police.
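
Disparities like this are typically uncovered through disaggregated evaluation: measuring error rates separately for each demographic group instead of reporting a single overall accuracy figure. The Python sketch below illustrates the idea under stated assumptions. The record format is hypothetical, and the numbers are made-up toy data, not results from any real system or study.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the misidentification rate for each demographic group.

    Each record is a (group, predicted_id, true_id) tuple. This layout is a
    hypothetical example, not a standard format.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted_id, true_id in records:
        totals[group] += 1
        if predicted_id != true_id:
            errors[group] += 1
    # errors[group] defaults to 0 for groups with no mistakes.
    return {group: errors[group] / totals[group] for group in totals}


# Made-up toy results: 10 test faces per group, 3 misidentified in one group.
results = (
    [("lighter", f"id{i}", f"id{i}") for i in range(10)]
    + [("darker", f"id{i}", f"id{i}") for i in range(7)]
    + [("darker", "wrong_match", f"id{i}") for i in range(7, 10)]
)
rates = error_rate_by_group(results)
print(rates)  # {'lighter': 0.0, 'darker': 0.3}
```

On this toy data, the overall accuracy is 85% (17 of 20 correct). That single figure hides the fact that every error falls on the darker-skinned group.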

A lack of diversity in the development team can also lead to a limited understanding of the cultural nuances and complexities of issues faced by people of color. For instance, an AI system developed by a White team may fail to recognize certain cultural expressions, language use, or dialects. As a result, the system may misinterpret such inputs and produce biased results that adversely affect people of color. Research on hate-speech detection, for example, has found that some classifiers were more likely to flag tweets written in African American English as offensive.

To address these issues, it is crucial that AI development teams be diverse and inclusive. This means hiring developers from diverse backgrounds and including a range of perspectives throughout the development process. It is equally important to train AI models on representative datasets so that they accurately reflect and serve the needs of all communities.
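
A simple first step toward representative data is to check how each group's share of a dataset compares with its share of the population the system is meant to serve. The sketch below assumes that each training example carries a group label and that the team has chosen a reference distribution. Both are assumptions, and choosing the reference is itself a judgment call. The group names and numbers are hypothetical.

```python
from collections import Counter


def representation_report(labels, reference_shares):
    """Compare each group's share of a dataset against a reference share.

    labels: one (hypothetical) group label per training example.
    reference_shares: the share each group is expected to have, e.g. in the
    population the system will serve.
    Returns {group: (observed_share, expected_share, gap)}.
    """
    counts = Counter(labels)
    total = len(labels)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        report[group] = (observed, expected, observed - expected)
    return report


# Toy dataset: 80 examples from group_a, 20 from group_b (made-up numbers).
labels = ["group_a"] * 80 + ["group_b"] * 20
report = representation_report(labels, {"group_a": 0.6, "group_b": 0.4})
for group, (observed, expected, gap) in report.items():
    print(f"{group}: {observed:.0%} of data vs {expected:.0%} expected (gap {gap:+.0%})")
# group_a: 80% of data vs 60% expected (gap +20%)
# group_b: 20% of data vs 40% expected (gap -20%)
```

A negative gap flags an under-represented group. Representation alone does not guarantee fair outcomes, so this check complements, rather than replaces, testing the trained model.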

Fairness

Fairness is another critical ethical consideration regarding AI "people of color" being programmed by White developers. Fairness means ensuring that AI systems do not discriminate against individuals or groups based on characteristics such as race, gender, or ethnicity. The lack of diversity in development teams and datasets used to train AI models can lead to biased algorithms that perpetuate and amplify existing systemic biases.

For instance, in 2018 Reuters reported that an experimental Amazon AI recruiting tool was biased against women. The tool was trained on resumes submitted to Amazon over a 10-year period, most of which came from men. As a result, it learned to penalize resumes that included the word "women's" (as in "women's chess club captain") and to downgrade graduates of two all-women's colleges. Amazon ultimately abandoned the tool. The bias was not intentional, but the case highlights the importance of designing AI systems for fairness from the start.

One way to promote fairness is to test AI systems for bias before deployment. This involves analyzing the system's outputs for patterns of discrimination, for example by comparing error rates or approval rates across demographic groups. If bias is found, it should be addressed before the system is deployed so that the system does not perpetuate or amplify systemic biases.
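
The sketch below shows one such test. It assumes a screening system that makes yes/no decisions and an audit dataset in which each applicant's group is known. The test compares selection rates across groups and applies the "four-fifths" heuristic from the US EEOC's Uniform Guidelines on Employee Selection Procedures, under which a group selection rate below 80% of the highest group's rate is commonly treated as evidence of adverse impact. This is only one of many fairness metrics, and the group names and numbers are hypothetical.

```python
def selection_rates(decisions):
    """Return the share of positive decisions per group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had anyone selected, so report parity
    return min(rates.values()) / highest


def passes_four_fifths_rule(rates, threshold=0.8):
    """Heuristic check: fail if the ratio falls below the threshold."""
    return disparate_impact_ratio(rates) >= threshold


# Made-up outcomes: 50 of 100 group_a applicants pass, 30 of 100 group_b.
decisions = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(f"ratio = {ratio:.2f}, passes = {passes_four_fifths_rule(rates)}")
# ratio = 0.60, passes = False
```

Passing this check does not prove a system is fair. Different metrics, such as comparing false positive and false negative rates across groups, can disagree, so audits usually examine several of them.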

Accountability

Accountability is another critical ethical consideration regarding AI "people of color" being programmed by White developers. Accountability means ensuring that developers and organizations are held responsible for the actions of their AI systems. This includes identifying who is responsible for designing, developing, and deploying AI systems and ensuring that they are held accountable for any harm caused by the system.

In the case of AI "people of color" being programmed by White developers, accountability is essential. If an AI system developed by a White team misidentifies a person of color or perpetuates systemic biases, who is responsible for the harm caused? The developers who created the system, the organization that deployed it, or both?

To address these issues, it is essential to establish clear lines of responsibility and accountability for AI systems. This means ensuring that developers are aware of the potential implications of their work and are held responsible for any harm caused by their systems. It also means that organizations must take responsibility for the AI systems they deploy and ensure they are transparent about their use and potential biases.

Transparency

Transparency is another critical ethical consideration regarding AI "people of color" being programmed by White developers. Transparency means ensuring that AI systems are open and understandable to the public. This includes making the system's design, development, and operation transparent to ensure that users understand how the system works and how it may affect them.

In the case of AI "people of color" being programmed by White developers, transparency is crucial. People of color may be more likely to be affected by biased AI systems, and they have a right to understand how these systems operate and how they may impact them.

To address these issues, organizations should publish clear information about a system's design, training data, and operation in a form that users can understand. They should also disclose any known biases or limitations of the system so that users can make informed decisions about its use.
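
One concrete form of disclosure is a short, structured document published alongside the system, similar in spirit to the "model cards" proposed by Mitchell et al. (2019). The sketch below is a hypothetical Python record, not a standard schema. The field names and example values are illustrative assumptions.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelDisclosure:
    """A minimal, hypothetical disclosure record loosely inspired by model cards."""
    name: str
    intended_use: str
    training_data: str
    # Error rate per demographic group, so readers can see disparities directly.
    error_rate_by_group: dict[str, float] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)
    contact: str = ""

    def to_json(self) -> str:
        """Serialize the record so it can be published alongside the system."""
        return json.dumps(asdict(self), indent=2)


card = ModelDisclosure(
    name="face-match-demo",  # hypothetical system name
    intended_use="Research evaluation only; not for identifying suspects.",
    training_data="Sources, collection dates, and demographic make-up of the data.",
    error_rate_by_group={"lighter": 0.0, "darker": 0.3},  # toy numbers
    known_limitations=["Higher error rate on darker-skinned faces in testing."],
    contact="Team responsible for the system and how to report problems.",
)
print(card.to_json())
```

Including a contact field also supports accountability, because it names who answers for the system when something goes wrong.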

The programming of AI "people of color" by White developers raises significant ethical considerations, including diversity, fairness, accountability, and transparency. A lack of diversity in development teams and in the datasets used to train AI models can perpetuate and amplify existing systemic biases, leading to discrimination against marginalized communities. To address these issues, organizations should build diverse and inclusive development teams, train models on representative datasets, test systems for bias before deployment, establish clear lines of responsibility and accountability, and make their systems transparent to the public. By doing so, we can help ensure that AI systems serve all communities equitably rather than perpetuating or amplifying systemic biases.