Peter Plantec explores recent advances in facial animation and crowd simulation software to see how different forms of virtual acting are converging in vfx-driven works.

To state the obvious, animation is and has been at the core of digital VFX since the beginning. Until recently, however, it's been mostly about spaceships, explosions, oceans, skies and other photoreal deceptions. But as moviemaking grows in sophistication, it uses more and more VFX. In fact, we're now using them just about everywhere to help moviegoers suspend disbelief. Now we're moving into the realm of acting.

Digital animated cartoon characters have never been considered part of VFX because they have a long, independent evolution and tradition of their own. They're a separate art form. But lately, directors have been using more and more real-looking characters in movies. It started, strangely, with real humans playing virtual actors. For example, Rachel Roberts played a virtual woman in Simone, much like Matt Frewer played the wonderfully synthetic and flawed Max Headroom. But things have come half-circle, with virtual actors now playing real people and creatures, and they're good at it.

Autonomous acting, therefore, is the coming thing. These are animated characters that literally animate their own performances. The first autonomous virtual actors were used to simulate smallish, low-resolution background people milling around or flying in vehicles. Some of the people walking the decks of the Titanic were autonomous. More recently, you've seen legions of Orcs and other critters in The Lord of the Rings, and you'll see amazingly sophisticated autonomous hordes in The Chronicles of Narnia: The Lion, the Witch and the Wardrobe and King Kong: animals, people and bugs, all doing their own thing.

Virtuoso hero VFX characters like Kong himself are not yet autonomous, but with the help of animators and technology, they're bringing virtual acting to the screen in ways only vaguely imagined just a few years ago.

The Holy Grail of digital character acting is to create close-up characters that moviegoers will accept as real. It has been done, and done well, as with Kong, but it's extremely expensive and time consuming, and it requires enormous talent. The big push is to create tools that will make all of this a lot faster and smoother and, ideally, allow real-time feedback. The technology is advancing at a double exponential rate (à la Kurzweil), and we'll soon be there.

Legendary cartoon animators like Frank Thomas, Ollie Johnston and Chuck Jones developed an exaggerated acting style that incorporated squash-and-stretch and cartoon physics. Remember Wile E. Coyote running off the cliff and continuing for a second-and-a-half before falling? These artists would mug in front of little round mirrors clamped to their drawing boards, making the faces they'd then draw on cels. They developed a compendium of rules for cartoon acting. In fact, cartoon characters have as many rules about acting as human actors do, only different. But because this new genre of animated, humanlike actors is now replacing real people, they have to act according to human rules. They're called synthespians, and we love them.

Jeff Kleiser (Kleiser-Walczak) coined that term to describe Nestor Sextone, his very first 3D-animated actor, and it's widely used today, along with the more irritating "vactor." Nestor cleverly played the part of a synthetic actor running for president of the synthetic actors guild. His body parts were sculpted in clay by Kleiser's wife and partner, Diana Walczak, and then 3D-scanned and assembled into a skeletal hierarchy for animation. According to Kleiser, "His joints were formed by interpenetrating parts, because at that time there was no software capable of creating flexible joints. We ended up with something like a plastic action figure to work with."

An interesting side note is that Poser creator Larry Weinberg helped Kleiser design and build Nestor's innovative animation system. Sextone made his screen debut at SIGGRAPH 1988 in a well-received :30 short.

As for the present, Kleiser says: "Synthespians have come into their own much more quickly than anyone expected five years ago, probably because of the extraordinary success they've enjoyed in recent feature film roles. In addition to digital stunt doubles, main characters have been created for major feature films like Lord of the Rings and Revenge of the Sith. With major box office sizzle in the offing, R&D budgets have been ramped up dramatically and the level of realism has benefited greatly." Kleiser and his crew have also been pioneers in face replacement technology (yet another branch of virtual acting). They're currently working on Brett Ratner's X-Men 3.

Synthespians are evolving into true virtual actors. We're seeing more truly fine emotional performances. I dare you to watch Peter Jackson's overly distended yet in some ways brilliant Kong and not believe that he has a soul.

Acting coach Ed Hooks, author of Acting for Animators, comes at this from an entirely different perspective:

"My sense of the developmental arc of digital acting runs this way: It has now been only 10 years since Toy Story was released. Before that, acting in animation was almost 100% cartoon style. CG created a situation in which animators were forced to start dealing with acting in a more objective and realistic way. Then MoCap arrived, mostly to help the game industry, and I think it caused this fixation on photoreal humans. When it comes to photoreal, the development of acting has been phenomenal, but the goal posts keep getting moved farther away. (The better we get, the more they expect.)

"There comes a certain point where human behavior moves to the realm of poetry rather than technology. Only recently, for instance, has the industry begun trying to get the eyes right in MoCapped humans. I've noticed that [they're] starting to play around with random focus gaze behavior as well. The good thing is that [they] seem to recognize that as a challenge. But even if you can get eyes to have the illusion of random focus, you still have the challenge of coordinating the focus with specific thoughts. And then we get into something like Neuro-Linguistic Programming (NLP)."
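Hooks's point about "random focus gaze behavior" can be illustrated with a tiny procedural layer. The following Python sketch, with hypothetical names and timing values, holds each fixation for a random interval and then jumps to a new small offset around a point of interest. As Hooks says, this only produces the illusion of random focus; nothing here ties the eyes to what the character is thinking.

```python
import random
from dataclasses import dataclass


@dataclass
class Fixation:
    start: float     # seconds from the start of the shot
    duration: float  # how long the eyes hold this target
    yaw: float       # degrees left/right of the point of interest
    pitch: float     # degrees up/down from the point of interest


def random_gaze_schedule(shot_length, seed=0, min_hold=0.2, max_hold=1.2, max_offset=3.0):
    """Hypothetical gaze layer: hold each fixation for a random time, then jump
    to a new random offset around the point of interest. Values are illustrative."""
    rng = random.Random(seed)
    schedule = []
    t = 0.0
    while t < shot_length:
        remaining = shot_length - t
        hold = min(rng.uniform(min_hold, max_hold), remaining)
        schedule.append(Fixation(start=t, duration=hold,
                                 yaw=rng.uniform(-max_offset, max_offset),
                                 pitch=rng.uniform(-max_offset, max_offset)))
        if hold == remaining:  # this fixation runs to the end of the shot
            break
        t += hold
    return schedule
```

Seeding the generator keeps the eye darts identical from one render to the next, which matters when a director approves a take.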

Two Approaches to Virtual Acting

Let's take a closer look at individual digital acting and realistic crowd simulation. The former is close-up and personal, while virtual crowds need to blend into the background effectively, giving the impression of many individuals behaving with purpose. The latter saves lots of money and opens many doors.

There is a lot going on in this new field, but let's focus on just a few tools. Face Robot (www.softimage.com/Products/face_robot/video_gallery.asp) is a high-end facial animation tool currently in development at Softimage. Blur Studios used the alpha version for the U2 music video "Original of the Species" (www.mp3.com/albums/649773/summary.html), as well as for X-Men characters in a new game trailer. Massive, meanwhile, is the award-winning AI-based crowd simulation program from Massive Software (www.massivesoftware.com/) that just keeps getting better and better.

According to Hooks, "If we're going to create believable characters, we need tools to do it fast and effectively, and the right people to use them."

Face Robot from Softimage

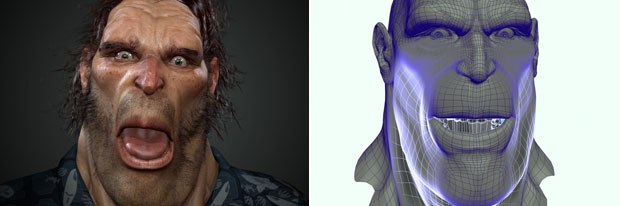

It's still rough around the edges (not a full-bore application yet), but Face Robot represents a serious leap in facial animation. It is designed to make believable facial performance fast and fairly straightforward. I believe it is a tool that cries out for artists familiar with face acting to bring out its true value. Even in the simple animations shown at SIGGRAPH this year, you can see the potential, especially for subtle stuff that imparts personality. There is one bit called "Tough Guy" that I particularly like, where the demo character, Rock Falcon, a cartoonish Neanderthal humanoid, forgets himself and spits, then gives a subtle, embarrassed smile. I bought it. For a second, I even thought the character was really embarrassed from the core out.

OK, you start with a head mesh created in Softimage (or almost any high-end 3D software) that can be adapted to the Face Robot system. The animator sets up constraints within the soft-tissue model, along with a small number of MoCap markers for the capture. He then becomes an acting director to get the performance from the SAG member doing the actual acting. SAG approves of all this, BTW.

Although Hooks was not impressed by the actor in the SIGGRAPH presentation ("he indicates") and has certain misgivings ("It will never be possible to capture the nuance of, say, sadness, by pressing the 'sad' key"), he was impressed by the technology, which is a plus.

Face Robot doesn't apply specific emotion presets to the face; instead, it uses an underlying jellyfish-like soft-tissue substrate that the animator constrains to work in ways compatible with the character's personality and geometry.

Michael Isner, Softimage's product supervisor on Face Robot, explains: "Clearly face acting is becoming more important daily, and we think we've developed the breakthrough technology to move that ahead. With Face Robot, you actually build in a physical personality that works synergistically with the MoCap data to yield a true and unique performance. Face Robot actually contributes to that performance. It doesn't merely move according to MoCap data, but according to its own underlying soft-tissue model. This yields unique, remarkably lifelike performances with very few markers."
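The article doesn't say how Face Robot's soft-tissue model spreads a few dozen markers across a whole face. As a generic point of comparison only, here is a Python/NumPy sketch of Gaussian radial basis function (RBF) interpolation, a standard way to let a small set of marker displacements drive many mesh vertices. The function name and the `sigma` falloff parameter are hypothetical, and unlike Face Robot this has no notion of tissue, constraints or personality.

```python
import numpy as np


def rbf_deform(markers_rest, markers_moved, vertices, sigma=1.0):
    """Spread sparse marker displacements over dense mesh vertices using
    Gaussian radial basis functions. A generic stand-in, not Face Robot's solver."""
    markers_rest = np.asarray(markers_rest, dtype=float)                   # (m, 3)
    displacements = np.asarray(markers_moved, dtype=float) - markers_rest  # (m, 3)
    vertices = np.asarray(vertices, dtype=float)                           # (n, 3)

    def kernel(a, b):
        # Pairwise squared distances turned into a Gaussian falloff.
        d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-d2 / (2.0 * sigma ** 2))

    # Solve for weights so the interpolant reproduces each marker's displacement exactly.
    weights = np.linalg.solve(kernel(markers_rest, markers_rest), displacements)  # (m, 3)
    # Evaluate the interpolated offset at every vertex and apply it.
    return vertices + kernel(vertices, markers_rest) @ weights
```

Larger `sigma` values spread each marker's influence farther across the face; markers placed very close together can make the linear system ill-conditioned.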

Adds Jeff Wilson, Blur Studios' animation supervisor, who worked with the Face Robot development team on the U2 music video for New York City-based production company Spontaneous: "I'd hit a wall when it came to face animation. The technology we were using just wasn't giving me what I needed to stay on the cutting edge. Fortunately, Blur is located close to Softimage's offices in Venice, California. I hooked up with Michael Isner there and we decided to work closely together on Face Robot. [It] has a really organic feel. It's like IK in a jellyfish. It's really very efficient. I'd say you get serious bang for the buck with it.

"Face Robot cuts face animation setup time drastically. For example, we cut the number of MoCap markers needed for Bono's face in the U2 video from 110 to 32. I did a little hand animation for parts of Bono's mouth for the video. It's impossible right now to really capture mouth movement to my satisfaction. We did the entire video in seven weeks, which is pretty amazing."

The other thing about Face Robot is that, in addition to its own unique features, it also has all the tools of SOFTIMAGE|XSI character animation built in. It's a high-end standalone that will surely be integrated into many pipelines in the near future, because it gives great performances with minimal MoCap data and short development times. So definitely keep your eye on it.

Behavior

Softimage has another product that you may not be familiar with. It's called Behavior. They refer to it as "intelligent digital choreography," and it is included with SOFTIMAGE|XSI Advanced 5.0. It has a development environment designed mainly for TDs (and requires a lot of scripting).

Behavior is a bit esoteric for many artists to use, but it is very sophisticated and powerful. Frankly, I haven't used it, but I'm familiar with its principles, having reviewed the now-obsolete product it's based on: Motion Factory. It uses smart finite state machines, which are AI behavior models built from inputs, outputs and transitions between states, to control individual crowd characters in very sophisticated ways. It also has many of the tools needed to control armies, such as terrain following and rag-doll dynamics. According to product supervisor Yotto Koga, "Behavior is far more sophisticated than many people realize. You can achieve remarkably realistic autonomous behavior with it. We're working on making it fully accessible to artists now." Behavior is tightly integrated into the XSI platform, and as more and more crowd behavior is used in movies, people are starting to realize how useful Behavior is.
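To make the finite-state-machine idea concrete, here is a minimal Python sketch of one crowd soldier's brain: a handful of states, a single input event computed each frame from what the agent senses, and a transition table. The states, events and thresholds are hypothetical illustrations; this is not Behavior's API, and it leaves out the "smart" extensions Koga alludes to.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    ADVANCE = auto()
    ATTACK = auto()
    FLEE = auto()


# (current state, input event) -> next state. Hypothetical example states and events.
TRANSITIONS = {
    (State.IDLE, "enemy_visible"): State.ADVANCE,
    (State.IDLE, "enemy_in_range"): State.ATTACK,
    (State.ADVANCE, "enemy_in_range"): State.ATTACK,
    (State.ADVANCE, "no_enemy"): State.IDLE,
    (State.ATTACK, "enemy_visible"): State.ADVANCE,
    (State.ATTACK, "no_enemy"): State.IDLE,
    (State.IDLE, "badly_hurt"): State.FLEE,
    (State.ADVANCE, "badly_hurt"): State.FLEE,
    (State.ATTACK, "badly_hurt"): State.FLEE,
    (State.FLEE, "no_enemy"): State.IDLE,
}


def perceive(enemy_distance, health, attack_range=2.0, sight_range=30.0):
    """Reduce raw sensor values (distance may be None) to one input event per frame."""
    if enemy_distance is None or enemy_distance > sight_range:
        return "no_enemy"
    if health < 0.25:
        return "badly_hurt"
    if enemy_distance <= attack_range:
        return "enemy_in_range"
    return "enemy_visible"


def step(state, event):
    """Next state; any (state, event) pair not in the table keeps the current state."""
    return TRANSITIONS.get((state, event), state)
```

Keeping the transitions in a table rather than in nested if-statements makes it easy to copy one soldier's brain and retune it for another character type.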

I had given up on Softimage some years ago, when I thought its development had fallen into the wrong hands. All that has changed. With XSI Advanced 5.0, Face Robot and Behavior, as well as a new strategic partnership with Pixologic (ZBrush 2), Softimage is working hard to retake its position in the 3D world of character animation.

Massive Appeal

Stephen Regelous is an amazing New Zealander. He's not only a brilliant programmer; he's also an artist and animator. Sometimes it is these special people who lead us toward the future. Having already won a technical Academy Award for his Massive crowd animation software, he's taken smart steps to create Massive 2.0, possibly the most intelligent (literally) and cost-effective solution ever for believable large-crowd simulation.

Massive 2.0 agents can see and hear and remember, and they behave intelligently and with personality. You can have an army of agents all behaving in individual ways and reacting to what's going on around them. With his new product, Massive Jet, you get lots of out-of-the-box, easily customizable agents ready for action. The cost and time savings are significant, and development has become much easier.
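How Massive implements its senses isn't described here, so the following Python sketch is only a simple geometric stand-in for "seeing" and "remembering": a 2D view-cone test plus a memory that forgets a target a few seconds after it was last seen. The function and class names, the 120-degree field of view, the 25-unit range and the three-second memory span are all assumptions.

```python
import math


def can_see(agent_pos, agent_heading, target_pos, fov_degrees=120.0, max_range=25.0):
    """2D view-cone test: is the target within range and inside the field of view?
    agent_heading is in radians; thresholds are illustrative assumptions."""
    dx = target_pos[0] - agent_pos[0]
    dy = target_pos[1] - agent_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return True
    if dist > max_range:
        return False
    # Angle between the heading and the direction to the target, wrapped to [-pi, pi).
    angle = math.atan2(dy, dx) - agent_heading
    angle = (angle + math.pi) % (2 * math.pi) - math.pi
    return abs(angle) <= math.radians(fov_degrees) / 2


class Memory:
    """Remember where each target was last seen; forget it after `span` seconds."""

    def __init__(self, span=3.0):
        self.span = span
        self.last_seen = {}  # target id -> (time seen, position)

    def update(self, now, target_id, position, visible):
        if visible:
            self.last_seen[target_id] = (now, position)
        # Drop memories older than the span.
        self.last_seen = {k: v for k, v in self.last_seen.items() if now - v[0] <= self.span}

    def recall(self, target_id):
        entry = self.last_seen.get(target_id)
        return None if entry is None else entry[1]
```

Memory is what lets an agent keep chasing a soldier who has just ducked behind a tree, instead of forgetting him the instant he leaves the view cone.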

How is it done? Let's say you need an army of people milling around the streets of Manhattan. You can get Massive Jet with its off-the-shelf agents, set up your 3D character geometry and your environmental constraints, and set the thing in motion without even having to create any brains for your characters. Now let's suppose you need some special behaviors. You can read the manual, take the Massive tutorials and learn how to script your own brain, but you don't want to do it from scratch: take one of the existing agents and modify it to your needs. Yes, you have to be a bit technical, but you also need to have some acting sensitivity.

According to Regelous: "Massive has had very advanced features from the beginning, but it takes time for users to realize what can be done with it. One of the things I'm enjoying now is seeing what artists are able to do with the software. I'm impressed with what they've accomplished in this latest round of films. We keep making it more powerful, increasing the degree of physical interaction and what we call 'Smart Stunts,' which make it relatively easy for artists to set up complex, believable character interactions. The Smart Stunts create agents that use rigid body dynamics and blend transitions of MoCap data. Say a soldier takes a dive at another; at the point where they impact, they normally turn into rag dolls, but directors expect real performances at that point. In Massive, the smooth blending of dynamics, MoCap and hand animation makes that possible. I'm especially impressed with what the team at R&H has done with that in Narnia."
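The problem Regelous describes, a hard cut from MoCap to rag doll at the moment of impact, is commonly softened by cross-fading between the two. Here is a minimal Python sketch of that idea under stated assumptions: poses are dictionaries of joint angles, the physics pose comes from some rag-doll solver not shown here, and the quarter-second blend window is arbitrary. It is not Massive's Smart Stunts implementation.

```python
def smoothstep(x):
    """Ease-in/ease-out ramp, clamped to [0, 1]."""
    x = max(0.0, min(1.0, x))
    return x * x * (3.0 - 2.0 * x)


def blend_pose(mocap_pose, physics_pose, t, impact_time, blend_duration=0.25):
    """Cross-fade from a MoCap pose to a physics (rag-doll) pose after impact.
    Poses map joint name -> angle in degrees. Blending angles linearly per joint is a
    simplification; production rigs typically blend rotations as quaternions."""
    w = smoothstep((t - impact_time) / blend_duration)
    return {joint: (1.0 - w) * mocap_pose[joint] + w * physics_pose[joint]
            for joint in mocap_pose}
```

Before impact the weight is 0 and the captured performance plays untouched; a quarter-second later the physics has fully taken over, with no visible pop in between.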

Massive was a key enabling tool in both The Chronicles of Narnia: The Lion, the Witch and the Wardrobe and King Kong, and you'll see lots of it in two animated features from Warner Bros. in '06: George Miller's Happy Feet (Animal Logic) and John A. Davis's The Ant Bully (DNA Prods.). Although it's a piece of cake to use off-the-shelf agents, these guys created dozens of unique brains designed to meet the specific demands of the director.

Let's focus on the team at Rhythm & Hues and their Massive work on Narnia, supervised by Dan Smiczek, who says: "I assembled the most incredible team from tracking, effects and character animation. Massive requires a multidisciplinary approach. We got guys fresh to the industry, and we included experienced people too. There was a lot of interaction, and what you see is truly a cohesive team effort."

In fact, their Massive agents could be repurposed so quickly and put into new situations so efficiently that director Andrew Adamson was able to drastically reduce the number of expensive extras with prosthetics and to try out some things that would have been impossible without the Massive agents.

"Nobody really understands my job," Smiczek admits. "You have to educate people about how Massive works. It's a unique kind of animation. Working with Massive is very front-end heavy. People want to see results right away, and Massive doesn't work that way. You can't show much at first, but once you've done the setup you can put out a tremendous amount of work with very short turnaround times."

Not having experimented with Massive 2.0, I was curious about the general process. "We create a template agent, kind of a generic brain that can be modified to meet the demands of each character type," Smiczek continues. "Each TD would then take that core agent and adapt it to the agents they were working on, customizing the behaviors as appropriate. In the end, we rendered 455,704 unique characters, no two alike. That's pretty amazing when you think about it. Of course, the Massive software made that possible. You can apply a few basic agents to an entire army of characters and it will vary the behaviors in logical ways, making each one behave a little differently."
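The template-agent workflow Smiczek describes can be sketched in Python: a generic set of brain parameters, per-character-type overrides, and a seeded per-agent jitter so that every instance differs slightly but reproducibly. The parameter names, values and character types are hypothetical, and Massive brains are far richer than a few numbers; this only illustrates the layering.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BrainParams:
    # Hypothetical behavior parameters, for illustration only.
    walk_speed: float = 1.4     # metres per second
    aggression: float = 0.5     # 0 = timid, 1 = reckless
    reaction_time: float = 0.3  # seconds


TEMPLATE = BrainParams()

# Per-character-type customizations layered on top of the generic template.
TYPE_OVERRIDES = {
    "minotaur": {"walk_speed": 1.1, "aggression": 0.9},
    "faun": {"walk_speed": 1.8, "reaction_time": 0.2},
}


def make_agent(char_type, agent_id, variation=0.1):
    """Template -> type overrides -> per-agent jitter of up to +/- `variation`
    (as a fraction). Seeding by type and id makes each agent reproducible."""
    base = replace(TEMPLATE, **TYPE_OVERRIDES.get(char_type, {}))
    rng = random.Random(f"{char_type}:{agent_id}")

    def jitter(value):
        return value * (1.0 + rng.uniform(-variation, variation))

    return BrainParams(
        walk_speed=jitter(base.walk_speed),
        aggression=min(1.0, max(0.0, jitter(base.aggression))),
        reaction_time=jitter(base.reaction_time),
    )
```

Unknown character types simply fall back to the template, which mirrors the "start from a core agent, then customize" approach described above.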

"To build our MoCap library, we did 22 weeks of motion capture with all sorts of actors, even an NFL player and a horse. We designed and built 50 individual Massive agents. The horse was MoCapped as half of a centaur. We used two brains for it, one for the horse behaviors and one for the human half, blending the performances seamlessly. We also keyframe-animated the leopard, cheetah, hawk and gryphon agents."
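One way to read "two brains, blending the performances seamlessly" is a layered, per-joint blend: the human brain drives the upper body, the horse brain drives the legs, and the two share the waist. The Python sketch below assumes hypothetical joint names and a 0.5 waist weight; R&H's actual setup isn't described beyond Smiczek's comments.

```python
# Weight of the human-half brain per joint (1.0 = human only, 0.0 = horse only).
# Joint names and the 0.5 waist weight are illustrative assumptions.
HUMAN_WEIGHT = {
    "head": 1.0, "spine_upper": 1.0, "arm_l": 1.0, "arm_r": 1.0,
    "waist": 0.5,
    "withers": 0.0, "foreleg_l": 0.0, "foreleg_r": 0.0,
    "hindleg_l": 0.0, "hindleg_r": 0.0,
}


def combine_centaur(human_pose, horse_pose, weights=HUMAN_WEIGHT):
    """Layered blend of two brains' outputs (joint -> angle in degrees). Each brain
    only has to supply the joints it drives, plus any shared joints like the waist."""
    combined = {}
    for joint, w in weights.items():
        if w >= 1.0:
            combined[joint] = human_pose[joint]
        elif w <= 0.0:
            combined[joint] = horse_pose[joint]
        else:
            combined[joint] = w * human_pose[joint] + (1.0 - w) * horse_pose[joint]
    return combined
```

The waist is where the two performances meet, so that is the joint where a partial weight does the smoothing.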

Now Consider This

Clearly, two major VFX technologies are in rapid convergence. One technology provides tools to create synthetic actors that can be animated by talented people to give wonderful, authentic, humanlike performances. This is an extension of traditional animation. It is represented by the hero characters in Narnia and by Kong himself in King Kong. Ready for prime time, they work directly in front of the camera. We've had this technology, which includes Maya, Max and MoCap, for a while, and it's getting better daily. Group all of these processes into the first kind of technology: labor-intensive character animation.

The second technology is represented by the smart characters in Narnia and Kong, developed by the Massive teams at R&H and Weta Digital. The labor in this technology lies mainly in setup and building behavior libraries, but everything that gets done is reusable and quickly adaptable to new characters. Except for last-minute performance tweaking, the final render is created autonomously according to the script. Although the script is written in technical terms, it still follows the screenwriter's and director's instructions much as actors with paper scripts do. Believable performances have been achieved with this technology.

Autonomous virtual actors already stand right behind frontline human actors and animated hero characters, and they're doing a good job. Consider that Regelous has incorporated sophisticated blend-shape face animation technology into Massive 2.0's pipeline. Somebody is thinking about autonomous face acting, right? Clearly, what was once crowd simulation software has become far more than that, and Massive Software is not sleeping as we speak. In fact, Regelous promises much closer interactive performances in the future.

Next, consider that Face Robot, like MoCap, works in both worlds, adding autonomous performance elements to traditional animation. I suspect we'll soon be able to set up autonomous face performances in advance and have them run according to script, automatically blending MoCap components as necessary. Remember, the soft-tissue model interpolates all the MoCap input, refining and contributing directly to the performance. I'd like to see Face Robot eventually take plug-in personality modules that would mediate the soft-tissue contributions to reveal more subtle aspects of character. No push-button emotions here.

The next logical step for Softimage would be to unite Face Robot with Behavior's autonomous finite state engines. In fact, I've been told that all of the facilities in SOFTIMAGE|XSI Advanced are already available to the Face Robot animator! It's all about letting talented VFX animators have at it now. Very exciting stuff.

Think about the implications this line of development has for our field. All sorts of new skills will be utilized. I'm going to shinny out on a limb and say that the convergence is already upon us. Next year might even see at least one major effects movie featuring one or more autonomous actors with speaking parts. I might be off by a season, but not more. I'm betting we will soon have major stars who are entirely synthetic and who basically work for nothing, straight from the script. I can see it now: we'll need to build robots to sign the autographs.

Peter Plantec is a best-selling author, animator and virtual human designer. He wrote The Caligari trueSpace2 Bible, the first 3D animation book specifically written for artists. He lives in the high country near Aspen, Colorado. Peter's latest book, Virtual Humans, holds a five-star rating at Amazon after many reviews.