
SIGGRAPH is an excellent venue for catching innovative technology from the world's largest laboratories. Researchers and college students alike bring their theoretical research projects to the public in the Enhanced Realities displays, while the theories of previous years can be seen developing into commercial applications on the main Exhibit Hall floor. Although very few of the research labs, universities and government agencies had their own booths, they were prominently featured as industry partners of companies that were marketing spin-offs of the R&D technology or selling the scientific community the tools of its trade.

MuSE Technologies and NASA

The most spectacular display of technology and science that I saw was at the NASA press conference where NASA engineers presented a four-dimensional, real-time simulation of the International Space Station and outer space fly-bys of Jupiter and its moons. Michelle A. Garn, Aerospace Vehicle Design and Mission Analysis Engineer, and Dr. Creve Maples, CTO, MuSE Technologies, Inc., as well as other technicians, demonstrated live, via a computer video conference hookup, the interactive working environment MuSE Technologies created by completely integrating all of NASA's databases, including legacy Fortran code from the Sixties.

The demonstration began with a simulated fly-by of Jupiter and its moons. The presentation used lightweight polarized glasses rather than a heavy VR headset to achieve a three-dimensional view of the large interactive screen. As the space probe neared Jupiter, the orbits of all the moons were plotted along with the moons' current locations, which were then constantly updated in real time. While the orbital data was being accessed and displayed, all current knowledge of the chemistry, geology and other physical characteristics of the planet and its moons was instantly accessible from the NASA databases by clicking on the object of interest.

Next, they switched to a real-time simulation of the International Space Station in Earth orbit with payloads and interactive human models. This live demonstration was conducted with multiple participants thousands of miles apart, linked via the Continuum collaborative network.

This simulation is one of the most complex software integration projects ever undertaken by NASA. Within the simulation, NASA engineers were able to test and manipulate the orbital dynamics of the space station, fly re-entry vehicles back to Earth, avoid obstacles in docking procedures, test the effectiveness of on-board instrumentation and explore the dynamics of human interaction in different situations. Currently, 117 different data sets can be accessed for experimentation. In terms of dollar savings and human safety, this project has incalculable value in designing the actual space station and training the personnel who will be running it.

The Newest Virtual Model Display System

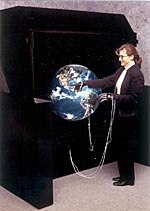

Fakespace has taken the virtual reality experiments that were premiered at SIGGRAPH in years past and turned them into a solid commercial reality. At this year's show, Fakespace demonstrated two Virtual Model Display (VMD) systems, including the Immersive WorkBench in the Sun Microsystems, Inc. booth and the first public demonstration of the new VersaBench in the Fakespace booth.

Fakespace VMD systems enable engineers, designers, researchers and military commanders to view and manipulate realistic 3-D computer-generated models as if they were real objects. In conjunction with Sun Microsystems, Fakespace designed an interactive model using a beta version of the Java 3D programming environment. The demonstration illustrates the power of a VMD to facilitate intuitive interaction with 3-D visualizations of data sets.

The Immersive WorkBench Java 3D implementation in the Sun booth, driven by Sun's Ultra 60 workstation with Elite3D graphics, is a 3-D visualization that enables the user to arrange "virtual" furniture on a tiled floor. Models of chairs, lamps and dressers can be selected from a moving palette and placed into the "room" using a stylus and the PINCH glove interface system. The virtual models can be selected and arranged in the room as if they were real physical models.

In its own booth, Fakespace demonstrated its newest virtual model display system, the VersaBench, driven by a Silicon Graphics Onyx 2 InfiniteReality visualization supercomputer. The VersaBench, originally developed for the U.S. Naval Air Warfare Center Aircraft Division (NAWCAD) at Patuxent River, Maryland, is the first VMD to support solid state projection technology and passive stereo viewing. The system has a compact footprint relative to its large viewing surface, an adjustable viewing plane that moves from horizontal to full vertical, and exceptional brightness. Fakespace showed a variety of applications on the VersaBench, including earth science, computational fluid dynamics and mechanical design simulations.

What I found to be the most impressive feature of Fakespace's many applications was ease of use. The gloves were lightweight and flexible, not cumbersome and robotic as early implementations were. The glasses for viewing simulations were no heavier than ordinary sunglasses, and all the projection controls were built into the table, eliminating the need for heavy headsets. This makes working on collaborative projects easier since you can turn to the person beside you or look down at your notes and actually see them, rather than being trapped in a heavy VR helmet.

Advanced Visual Systems

Advanced Visual Systems (AVS) is the premier global provider of data visualization solutions. AVS software and services help companies transform massive quantities of data into visual representations. The company provides tools for developers who build customized visualization software, as well as ready-to-use applications for end users who analyze information contained in graphical representations.

Among the industries represented at their booth were:

Oil and Gas Exploration

The largest oil fields and greatest deposits of natural gas were found years ago. Now the petroleum industry faces the daunting challenge of searching for much smaller pockets of these natural resources, which frequently are hidden deep beneath the earth's surface. In addition to finding new deposits, emerging technologies make it possible to optimize existing finds, extending the life of many oil fields by as much as two decades through improved analysis and recovery methods. Scientists and engineers must interpret vast amounts of seismic data with great precision before they can either confirm a reservoir or make decisions about managing existing assets in the ground.

A subsidiary of Petroleum Geo-Services of Norway, PGS Tigress Limited of Berkshire, England has developed a product suite called 3-D Visualization and Grid Management. Geologists, geophysicists and reservoir engineers from dozens of the world's largest petroleum and gas producers rely on these technical applications to analyze oil reservoirs, model oil reserves, and simulate production.

NOAA Weather Prediction Models

Weather forecasters in today's National Weather Service (NWS) have more data from more sources than ever - and that only makes a tough job tougher. At more than 120 NWS offices around the country, forecasters' detailed reports are relied on by mariners, airlines, farmers, shipping companies, and the military. Of course, they also serve as the basis for most public weather forecasts.

"Three-dimensional visualizations," says Philip A. McDonald, one of CIRA's chief research associates for the D3D project, "bring to meteorology what voices brought to silent films: a vivid approximation of real life." And real life is precisely what meteorologists are trying to approximate as they collect two-dimensional 'slices' of weather and mentally stack and analyze them. With 3-D visualizations, users can virtually surf through the weather, seeing it in a color-coded, high-resolution format. It's like the difference between looking at a road map and driving down the road itself. The goal is to relieve meteorologists from having to do mental calculations with 2-D images and let them concentrate on the actual numbers needed for forecasts.

SGI Industry Partnerships

In their huge display area, Silicon Graphics had an entire stage set up to accommodate film clips from their industry partners, illustrating scientific and technological research and implementation powered by their computer systems. Under the general theme 'Power of Insight,' the companies featured there were: NASA Ames Research Center, Space Imaging, Jet Propulsion Laboratory, Stanford University Medical Center, University of Washington, and my favorite, the America's Cup Yacht Design.

Technology Frontiers in Enhanced Realities

One of the main aims of Enhanced Realities is to expose people to new techniques that have the potential to become mainstream applications in the near future. For this section of SIGGRAPH, more than 50 proposals were submitted to the jury, which narrowed the field to 17 presentations. The main focus of this year's exhibits was new ways to interact with data sets. We saw devices that ranged from cyber slippers to teddy bears to whole-body interactions. Here are a few of the projects that bear watching as the concepts they illustrate become more developed.

HoloWall: Interactive Digital Surfaces

HoloWall is an interactive wall system that allows visitors to interact with digital information displayed on the wall surface without using any special pointing devices. The combination of infrared cameras and infrared lights installed behind the wall enables recognition of human bodies, hands, or any other physical objects that are close to the wall surface. Light motes and virtual insects are attracted to the bodies and cluster where they are positioned.

Mass Hallucination

This imaging display changes according to the number of people watching it, their behaviors, and whether they've watched the device before. This display captures video along the same optical axis as video is displayed, so images of observers can be directly manipulated, composited or distorted on the display.

inTouch

Touch is a fundamental aspect of inter-personal communication. Yet while many traditional technologies allow communication through sound or image, none are designed for expression through touch. The goal of inTouch is to bridge this gap by creating a physical link between users separated by distance.

Virtual FishTank

The Virtual FishTank is a simulated aquatic environment featuring a 400 square-foot tank populated by whimsical and dynamic fish. Participants can: create their own fish, design behaviors for their fish, observe their fish interacting with other fish, manipulate behavioral rules for a group of fish, discover how these behaviors can emulate schooling and analyze emerging patterns. Through real-time 3-D graphics, visitors are introduced to ideas from the sciences of complexity--ideas that explain not only ecosystems, but also economic markets, immune systems, and traffic jams. In particular, visitors learn how complex patterns arise from simple rules.
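To make the "complex patterns from simple rules" idea concrete, here is a minimal sketch of rule-based schooling in the style of the well-known boids model. It is not the FishTank's own code: the three rules (cohesion, alignment, separation), the function names and every parameter value are assumptions chosen for illustration.

```python
import math
import random


def step(fish, radius=5.0, cohesion=0.01, alignment=0.05,
         separation=0.05, min_dist=1.0, max_speed=1.0):
    """Advance every fish one tick. Each fish is a tuple (x, y, vx, vy).

    All rule weights are hypothetical; they are not taken from the exhibit.
    """
    updated = []
    for i, (x, y, vx, vy) in enumerate(fish):
        # A fish only reacts to others within its sensing radius.
        neighbors = [f for j, f in enumerate(fish)
                     if j != i and math.hypot(f[0] - x, f[1] - y) < radius]
        if neighbors:
            n = len(neighbors)
            cx = sum(f[0] for f in neighbors) / n   # neighbors' center
            cy = sum(f[1] for f in neighbors) / n
            avx = sum(f[2] for f in neighbors) / n  # neighbors' mean velocity
            avy = sum(f[3] for f in neighbors) / n
            # Rule 1 (cohesion): drift toward the neighbors' center.
            # Rule 2 (alignment): steer toward the neighbors' mean velocity.
            new_vx = vx + cohesion * (cx - x) + alignment * (avx - vx)
            new_vy = vy + cohesion * (cy - y) + alignment * (avy - vy)
            # Rule 3 (separation): push away from fish that are too close.
            for f in neighbors:
                d = math.hypot(f[0] - x, f[1] - y)
                if 0 < d < min_dist:
                    new_vx += separation * (x - f[0]) / d
                    new_vy += separation * (y - f[1]) / d
            vx, vy = new_vx, new_vy
        # Cap the swimming speed.
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        updated.append((x + vx, y + vy, vx, vy))
    return updated


def heading_agreement(fish):
    """Return 1.0 when all fish share one heading, lower when headings scatter."""
    ux = uy = 0.0
    for _, _, vx, vy in fish:
        s = math.hypot(vx, vy) or 1.0
        ux += vx / s
        uy += vy / s
    return math.hypot(ux, uy) / len(fish)


if __name__ == "__main__":
    rng = random.Random(0)
    school = [(rng.uniform(0, 10), rng.uniform(0, 10),
               rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(30)]
    print("agreement before:", round(heading_agreement(school), 3))
    for _ in range(200):
        school = step(school)
    print("agreement after: ", round(heading_agreement(school), 3))
```

No fish is told to form a school; each one only looks at its neighbors. Changing a single weight, such as `alignment`, is the kind of "behavioral rule" manipulation the exhibit invites visitors to try.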

Haptic Screen

Haptic Screen is a new force-feedback device that deforms itself to present shapes of virtual objects. Typical force-feedback devices use a grip or thimble, but users of Haptic Screen can touch the virtual object without wearing such a device. Haptic Screen employs an elastic surface made of rubber. A 6X6 array of 36 actuators deforms the surface and controls its hardness according to the force applied by the user. An image of the virtual object is projected onto the elastic surface so that the user can directly touch the image and feel its rigidity.
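As a rough illustration of how a 6x6 actuator grid might present a shape, the sketch below samples a virtual surface at each actuator position and lets each actuator yield under the user's push like a spring. The dome shape, the spring model, the function names and the units are all assumptions; the exhibit's actual control scheme is not described here.

```python
import math

GRID = 6  # 6x6 array of 36 actuators, as described in the text


def dome_height(x, y, radius=1.0):
    """Height of a hypothetical virtual hemisphere centered on the screen."""
    r2 = x * x + y * y
    return math.sqrt(radius * radius - r2) if r2 < radius * radius else 0.0


def actuator_targets(height_fn, half_width=1.0):
    """Sample the virtual surface at each actuator to get target extensions."""
    coords = [-half_width + 2 * half_width * k / (GRID - 1) for k in range(GRID)]
    return [[height_fn(x, y) for x in coords] for y in coords]


def yielded_height(target, force, stiffness):
    """Assumed spring-like response: a harder virtual object (higher
    stiffness) gives way less under the same applied force."""
    return max(0.0, target - force / stiffness)


if __name__ == "__main__":
    for row in actuator_targets(dome_height):
        print(" ".join(f"{h:4.2f}" for h in row))
```

Raising `stiffness` makes the same push displace the surface less, which is one simple way to make a projected object feel rigid rather than soft.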

Natural 3-D Display System Using Holographic Optical Element

In this natural 3-D display system, a holographic optical element (HOE) overcomes conflicts between convergence and accommodation. Users experience clear stereoscopic vision, without glasses, of a broad field of view. With its multiple-focus HOE, the system offers two pairs of viewing ports in back-and-forth or horizontal locations.

Natural Pointing Techniques Using a Finger-Mounted Direct Pointing Device

Pointing with the index finger is a natural way to select an object, and if it can be incorporated into human-computer interaction technology, a significant benefit will be obtained for certain applications. Based on an infrared signal power density weighting principle, a small infrared emitter on the user's finger and multiple receivers placed around the laptop screen generate data for a low-cost microprocessor system.
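The weighting principle is not spelled out in detail, but one plausible reading is a power-weighted centroid: each receiver "votes" for its own location in proportion to the infrared power it picks up. The sketch below assumes that reading; the receiver layout, units and function name are hypothetical.

```python
def estimate_pointing_position(receivers, powers):
    """Estimate where the finger points as a power-weighted centroid.

    receivers: list of (x, y) receiver positions around the screen
    powers:    received infrared power at each receiver, same order
    """
    if len(receivers) != len(powers):
        raise ValueError("need one power reading per receiver")
    total = sum(powers)
    if total <= 0:
        raise ValueError("no infrared signal detected")
    x = sum(p * rx for (rx, _), p in zip(receivers, powers)) / total
    y = sum(p * ry for (_, ry), p in zip(receivers, powers)) / total
    return x, y


if __name__ == "__main__":
    # Hypothetical layout: four receivers at the corners of a 30 x 20 cm screen.
    corners = [(0.0, 0.0), (30.0, 0.0), (30.0, 20.0), (0.0, 20.0)]
    print(estimate_pointing_position(corners, [0.2, 0.9, 0.7, 0.1]))
```

A calculation this small is well within reach of a low-cost microprocessor, which fits the system's stated design goal.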

Stretchable Music with Laser Range Finder

Stretchable Music with Laser Range Finder combines an innovative, graphical, interactive music system with a state-of-the-art laser tracking device. An abstract graphical representation of a musical piece is projected onto a large vertical display surface. Users are invited to shape musical layers by pulling and stretching animated objects with natural, unencumbered hand movements. The project uses a scanning laser rangefinder to track multiple hands in a plane just forward of the projection surface. Using quadrature-phase detection, this inexpensive device can locate up to six independent points in a plane with cm-scale accuracy at up to 30 Hz. Bare hands can be tracked without sensitivity to background light and complexion to within a four-meter radius.
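For readers curious about how quadrature-phase detection yields a distance, here is a minimal sketch of the general technique. The modulated laser light returns with a phase shift proportional to the round-trip distance; mixing the return with in-phase (I) and quadrature (Q) references recovers that phase. The 25 MHz modulation frequency and the function names are assumptions, not figures from the project.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def range_from_quadrature(i, q, mod_freq_hz):
    """Convert I/Q detector outputs into a one-way distance in meters.

    The light travels out and back, so phase = 4*pi*f*d / c.
    Distances beyond c / (2*f) wrap around and become ambiguous.
    """
    phase = math.atan2(q, i) % (2 * math.pi)
    return C * phase / (4 * math.pi * mod_freq_hz)


def to_xy(range_m, scan_angle_rad):
    """Turn a range and the scanner's current angle into a point in the plane."""
    return range_m * math.cos(scan_angle_rad), range_m * math.sin(scan_angle_rad)


if __name__ == "__main__":
    MOD_FREQ = 25e6  # hypothetical; gives about 6 m of unambiguous range
    d = range_from_quadrature(0.0, 1.0, MOD_FREQ)
    print(to_xy(d, math.radians(45)))
```

Because the distance comes from the ratio of Q to I rather than from the strength of the return, the reading is largely independent of how much light the hand reflects, which is consistent with the device's stated insensitivity to background light and complexion.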

Gesture VR: Gesture Interface to Spatial Reality

In this demonstration, users interact with spatial simulations by means of a novel hand gesture recognition interface technology developed at Bell Labs. A freely moving, gloveless hand is the sole input device. Image sequences of the user's hand motions, acquired by video cameras, are processed by a computer program that recognizes gestures and calculates the hand's parameters. This information is used for precise control of navigation in 3-D space, for grasping and moving objects on the screen, or to provide a new kind of interface in video games.

MAGNET

MAGNET, France Telecom's research and development project for streaming, interactive multimedia, is an implementation of VRML97 and MPEG4 for scalable platforms in telecommunications environments.

MAGNET will enable delivery of a media-rich environment over very low, consumer-available bandwidth such as 33K modems. Because it implements the VRML97 and MPEG4 standards, MAGNET represents a near-future technology that will be widely accessible to an Internet consumer audience, business intranets and extranets, and content creators. The compression capabilities in MPEG and binary encoding for VRML will demonstrate the exploitation of this low-bandwidth medium. The MAGNET architecture is scalable, and future work will include implementations for scaled-down clients such as laptops or even smaller devices...

For SIGGRAPH participant contact information, copies of the SIGGRAPH '98 Program and Buyer's Guide can be purchased from the following source:

ACM Order Department
Church Street Station
P.O. Box 12114
New York, New York 10277-0163 USA
(800) 342-6626 (phone, U.S. and Canada)
(212) 626-0500 (phone, international and New York metro area)
(212) 944-1318 (fax)
orders@acm.org

Other technical materials are sold after SIGGRAPH as well and can be obtained through the above address.

Linda Ewing is a geophysicist with nearly 30 years of experience in the petroleum industry and possessor of a lifelong interest in space exploration. She is also staff editor of Visual Magic Magazine, a monthly publication focusing on the 3-D graphics and digital effects industries. You can reach Linda by e-mail at linda@visualmagic.awn.com