J. Paul Peszko looks into emerging technology and discovers that we ain't seen nothing yet.

The year: 1927. The movie: The Jazz Singer. Al Jolson utters the immortal line "You ain't heard nothin' yet," heralding the first major innovation in cinema since its inception in the late 19th century: synchronized sound. Fast-forward nearly 80 years, and if Jolson were around today, he would have to shout his line even louder. Yes, it has taken that long for cinema to embark on its second major innovation. Wait, you say. What about all the special effects, Dolby sound and CG, for goodness' sake! Aren't those incredible innovations? Incredible, yes, but still comparatively minor, because until now film has remained a two-dimensional medium. All that is about to change.

"You know, movies really haven't changed from the beginning. We're still caught in this paradigm of a succession of still frames, and that's not really how our minds work. And that's really not how our visual system works. Our visual system is really a hallucination that our brains resolve through the eyes. This software does a very good job of analogizing that process," asserts John North, director of business development at FrameFree, talking about an innovative process known as FrameFree Studio. North is one of several developers we interviewed about various emerging technologies that have put cinema on the verge of becoming a realtime, multi-dimensional, interactive form of entertainment.

To begin, what exactly is FrameFree? According to independent director John Gaeta, who used the technology on his short, Homeland, "FrameFree is a very powerful non-linear interpolation technology that produces very clean, somewhat organic-looking results. It seems to surpass many of the optical flow-type technologies that have been incorporated into past effects, and I've backed the flow approach on many. Essentially, it's the next-gen morpher."

Gaeta, who won an Oscar as visual effects supervisor on The Matrix, adds, "What's most interesting is that they're [FrameFree] presenting user interfaces very, very simply, so an artist can do what appear to be very complex interpolation effects from a more creative angle."

Just how simple is it? "The current process is not involved at all," states FrameFree's president, Tom Randolph. "Our process is new. There has never been an imagery synthesizer like FrameFree Studio before. We have a native file format that retains the extreme compression ratio of up to 1/5,000, but we can also dump frames to BMP, JPG, TIFF, PNG, AVI and QuickTime."

How exactly does it work, and what does it do? "FrameFree takes dual images and creates a transformation between images through a pixel mapping process," explains North. "The file between the two frames is incredibly small no matter how large the image is. The software can take 4096 x 4096 images and do a transform map between them, and the file in between is still 2K. All the way down to 320 x 240 for a cell phone, it's also 2K. It doesn't matter how much time you spend going between the two frames. You can go between the two frames in a second, and the file size is exactly the same as if you spend an hour going across them."
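
FrameFree's actual algorithm is proprietary and is not described here, but the idea North outlines, storing a transform between two keyframes rather than the in-between frames themselves, can be illustrated with a classic warp-and-dissolve morph. The sketch below is a toy under stated assumptions: grayscale images are lists of rows, the motion is described by a handful of hypothetical control vectors blended by inverse-distance weighting, and the flow is evaluated at each output pixel (an approximation that holds for smooth motion). What gets stored is only the keyframes plus the short control list; any number of in-between frames is computed from it on demand.

```python
def idw_flow(controls, x, y):
    """Displacement (dx, dy) at (x, y), blended by inverse-distance weighting
    from sparse control vectors [(cx, cy, dx, dy), ...] (hypothetical format)."""
    num_x = num_y = den = 0.0
    for cx, cy, dx, dy in controls:
        d2 = (x - cx) ** 2 + (y - cy) ** 2
        if d2 == 0:
            return dx, dy  # exactly on a control point
        w = 1.0 / d2  # weight falls off with squared distance
        num_x += w * dx
        num_y += w * dy
        den += w
    return num_x / den, num_y / den


def bilinear(img, x, y):
    """Sample a grayscale image (list of rows) at fractional (x, y), clamped to its edges."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1)
    y = min(max(y, 0.0), h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def in_between(a, b, controls, t):
    """Synthesize the frame at normalized time t in [0, 1] between keyframes a and b."""
    frame = []
    for y in range(len(a)):
        row = []
        for x in range(len(a[0])):
            dx, dy = idw_flow(controls, x, y)
            # Pull from A back along the motion and from B ahead along it,
            # then cross-dissolve so t=0 gives A and t=1 gives B.
            pa = bilinear(a, x - t * dx, y - t * dy)
            pb = bilinear(b, x + (1 - t) * dx, y + (1 - t) * dy)
            row.append((1 - t) * pa + t * pb)
        frame.append(row)
    return frame
```

Because `in_between` accepts any continuous `t`, the stored control list is the same size whether the transition is later rendered as 2 frames or 2,000; only the work done at playback time changes.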

Amazing as that sounds, Randolph adds, "We can live in our new world or support the old world. The difference is the file size. Our projects that are 2MB may be 100MB for Windows Media. You lose the amazing compression, but you still retain the quality in the discrete world. Our world of imaging is equational, so we carry no data. We generate data as we choose to, at the time we need it."

"FrameFree has seven different levels, or authoring platforms, depending upon the target. It uses your standard paradigm, a timeline authoring tool with layers, and you can put multiple keyframes on each level," continues North. "Then you can have relationships between different layers. So, you can have pretty complex movies."

The first two platforms are at the mobile phone level: 320 x 240 with two layers. FrameFree Studio Brew is specifically for the Qualcomm Brew platform. There is also a platform for other mobile phones, but Brew is the first platform on which FrameFree has been certified.

The next levels are FrameFree Studio Express and Studio Pro, which produce media for the web. Their highest display resolution is 640 x 480. The difference between the two is the number of layers: Studio Express has two, while Studio Pro has four.

The next levels are FrameFree Studio SD and FrameFree Studio HD. These platforms are for television, and each supports 10 layers. Studio SD's resolution is 768 x 576; Studio HD's is 4096 x 4096. "We use FrameFree Studio HD to collaborate with Hollywood," Randolph adds. "This tool is unique. It takes high-res photos and makes movies from just a few of them, at resolutions up to 4096 x 4096, or 16 megapixels. You can now easily synthesize reality from photos and make movies that look like reality just by math. Our tool can make a 30-second movie in about 15 minutes. Even huge projects take less than a week. It is simply magic."

And what happens if you play SD and HD on the web? North explains: "When you're looking at it through your browser, we're applying this transformation of the 2K in-between file in realtime. But if you're going to build any element that you're going to incorporate into a video abstract or a film abstract, you need to get them out as frames so that you can composite them in the normal way. So, we have an internal module called Slice. Once you determine the precise length of time that you need, you can slice the frames and export them into your compositing system."
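
The Slice step amounts to sampling a continuous transform at discrete times. A minimal sketch, assuming frames are exported at evenly spaced times at a chosen frame rate with both keyframes included; `slice_schedule` is a hypothetical helper for illustration, not FrameFree's module.

```python
def slice_schedule(duration_s, fps):
    """List (frame_index, time_in_seconds, t) for every frame to export.

    t is the normalized position in [0, 1] along the transform, so the same
    transform can be sliced to any length simply by sampling it more or less often.
    """
    steps = round(duration_s * fps)  # number of frame intervals
    if steps < 1:
        raise ValueError("duration must cover at least one frame interval")
    # steps + 1 samples so that both keyframes (t = 0 and t = 1) are exported
    return [(i, i / fps, i / steps) for i in range(steps + 1)]
```

Slicing the same transition at 2 seconds or 20 seconds only changes how many samples are taken; the stored transform itself stays the same.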

The seventh level is FrameFree Studio DP, for Digital Poster. "This is another version of the invented player model. But instead of being a web player, it's a node-locked player for fixed media screens like those in a mall or an airport. So, we've got the ability to have centralized control of a media campaign," North says. The resolution is 1920 x 1200, which happens to be the full raster of widescreen LCD displays.

North explains that an author can start at the highest resolution and scale it down for the various media. "Because we have this Write Once, Publish Anywhere capability, if you have one file that you're authoring in HD, there's no reason why you can't take the same file and dial it down for TV, dial it down for Digital Poster, dial it down for Express, and dial it down for the phone in terms of its output. So, that's one of the key features of this process." However, North cautions, "The other distinction I want to put in here up front is that, as you go down those target devices, there are also layer restrictions. So if you've got a 10-layer SD movie and you want to output it for the web, you're going to have to turn it into a 4-layer movie so we don't clog up the renderer in the web browser."
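
The Write Once, Publish Anywhere workflow, with its per-target layer restrictions, can be modeled as a small lookup table. The resolutions and layer counts below are the ones quoted in this article; the Digital Poster layer limit is not stated, so it is left unset. Fitting the frame while preserving aspect ratio, and merely reporting how many layers must be merged away, are assumptions: the article does not say how FrameFree actually reduces layers. All names here are hypothetical.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional


@dataclass(frozen=True)
class Target:
    name: str
    max_width: int
    max_height: int
    max_layers: Optional[int]  # None: the article gives no layer limit


# Limits as quoted in the article (hypothetical table, not FrameFree's API).
TARGETS = {
    "Brew": Target("Studio Brew", 320, 240, 2),
    "Express": Target("Studio Express", 640, 480, 2),
    "Pro": Target("Studio Pro", 640, 480, 4),
    "SD": Target("Studio SD", 768, 576, 10),
    "HD": Target("Studio HD", 4096, 4096, 10),
    "DP": Target("Studio DP", 1920, 1200, None),
}


def plan_output(width, height, layers, target):
    """Return (out_width, out_height, layers_to_merge) for one target.

    Scales down (never up) to fit the target while preserving aspect ratio;
    exact fractions avoid floating-point off-by-one pixel sizes.
    """
    scale = min(Fraction(1),
                Fraction(target.max_width, width),
                Fraction(target.max_height, height))
    out_w, out_h = int(width * scale), int(height * scale)
    excess = 0 if target.max_layers is None else max(0, layers - target.max_layers)
    return out_w, out_h, excess
```

For North's example, `plan_output(768, 576, 10, TARGETS["Pro"])` fits the SD frame into 640 x 480 and reports that six of the ten layers would have to be merged to reach the four-layer web limit.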

FrameFree Studio HD is sold as a site license. All the other platforms are sold online, starting with a one-month subscription of $50. It's a Windows-only authoring platform: you drag and drop files into the authoring dashboard and from there use the various layering tools to build a 3D object from 2D images. To see how FrameFree looks, go to www.framefree.com and download the ActiveX utility. Then click on Samples and go to the first level, marked Advertising and Branding. You can click on any of the icons. "Every one of these images [in the Samples gallery] is made with just the stills," suggests North. "There's a transform calculation that's occurring between multiple layers of multiple stills." North's favorite is the one at the top right, Paul Blain's Vacheron spot. It's hard to believe this 50-second film was done with nothing but stills and a little math.

Gaeta describes how he came to use FrameFree: "I had the technology in the back of my head for about a year, and then I had the opportunity to do a short film called Homeland. I needed to do an underwater scene. But the nature of Homeland is that it propagates forced hypnosis. So, I wanted something a little more surreal than just shooting people in a tank. Plus, I really couldn't get an underwater shoot off the ground. So, I decided to do it somewhat stylistically and use FrameFree to create a strangely nuanced underwater look.

"We shot incremental stills of the actors, dry, over greenscreen. We then used FrameFree technology to achieve a surreal underwater look. N Design then took those enhanced/processed performances and placed them into a mystical underwater environment. So the end result is a collaboration between FrameFree and N Design. N Design is a cutting-edge Japanese company that you will definitely be hearing more about in the future."

Gaeta is also developing new technology to create and render CGI in realtime and in HD. Along with partner Rudy Poat, a creative director at Electronic Arts (EA), and some friends, Gaeta is pioneering a company and a project called Deep Dark. Their first experiment appears in Gaeta's segment of the horror anthology Trapped Ashes, which will debut at the Toronto Film Festival. The feature comprises four stories told by four people trapped together in a room. Ken Russell helms The Girl With Golden Breasts, Sean Cunningham directs Jibaku, Monte Hellman helms Kubrick's Girlfriend, and Gaeta pilots My Twin, The Worm. Joe Dante supervises the wrap-around portion of the film.

"In Trapped Ashes, I needed to visualize a female character's imagination of something that might have been happening in the womb," explains Gaeta. "It's the fear of the unknown that's taking place in the developmental stages of her unborn child. Rudy and I worked on the scene in the first Matrix called the History Program. He's a genius alchemist when it comes to creative shader design. At Electronic Arts, he's spent all of his time since the first Matrix getting into the technology of games and interactive graphics. And that's a subject I've been very interested in myself for quite some time now. So, I thought it would be a good idea to reconnect with Rudy in an on-the-side collaboration."

Gaeta asked Poat to devise a process in which they could animate, compose and render this baby in utero in realtime and in high definition. "We worked on the technology and debuted it in the film," adds Poat. At the heart of the process is a way of layering shaders together in a form that no one else is using. Using game-world artificial intelligence (AI) logic and bundles of multithreaded graphics cards, the process can render in realtime images that would normally take several minutes or hours per frame. "Because this process combines proprietary and open source code with off-the-shelf video cards in a single package, it is a cost-effective solution, which is easily scalable to the needs of the production," Poat explains. "It can also perform realtime rendering in a procedural way, using the same game logic libraries, on secondary characters, environments, particle systems and other scene components."

Poat talks about the distinctive look that he and Gaeta were after in Trapped Ashes: "It's got a different texture, a different feel. A lot of the stuff we're going after has got more of a membrane, soft-skinned, undersea feel. We tried it on this film and it worked. Every shot in [Gaeta's segment of] Trapped Ashes runs in realtime and interactively as well. You can move the camera around, you can move the lights around, you can move around it in realtime. We just hit a button and say record." Poat thinks that many filmmakers will be impressed. "We've got everything from realtime AI to realtime multi-layered shaders to realtime compositing, all running at a level where an art director will say, 'That's not like anything I've ever seen in games.'"

The software that Poat uses has a graphical user interface that can control all aspects of lighting, shading and behavior in an animated scene. Consider, for example, a character with skin and hair wearing a cloth coat. Normally, the appearance and behavior of each of these elements, and how they interact with one another, would have to be calculated individually, frame by frame. With this technology, the computer artist and director can sit together and manipulate these elements in a plastic, fluid manner, seeing up-to-the-minute changes as they work. One of its key strengths is its flexibility, which lets the artist create either entire finished scenes or just the layers required, rendered out in separate passes for later use in another compositing environment.
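
Rendering just the required layers as separate passes only pays off if the passes recombine cleanly downstream. The standard tool for that is the Porter-Duff "over" operator applied to premultiplied-alpha pixels. The sketch below shows that generic operator on single RGBA pixels; it is not Poat's proprietary shader-layering method, and passes such as skin, hair and coat are purely illustrative.

```python
def over(top, bottom):
    """Porter-Duff 'over' for premultiplied RGBA tuples (r, g, b, a) in [0, 1]."""
    k = 1.0 - top[3]  # how much of the lower layer shows through
    return tuple(t + b * k for t, b in zip(top, bottom))


def composite(passes):
    """Stack per-pixel render passes listed back to front (e.g. background, coat, hair)."""
    result = (0.0, 0.0, 0.0, 0.0)  # start from fully transparent
    for layer in passes:
        result = over(layer, result)
    return result
```

Keeping the passes separate means a late change to one element (say, the color of the coat) only requires re-rendering that pass and re-running this cheap composite.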

Of course, many filmmakers have been concerned with how to get high-dynamic-range imagery in realtime. For Gaeta, this is one of the most interesting frontiers in graphics, with major implications for a number of things: how movies can essentially be made in the future, how high-quality, diverse interactive entertainment can be made, and how content can potentially be created on an interactive platform at a quality high enough to be output to film and released as a widescreen, passive piece of entertainment like a feature film. Content created this way is also interactively accessible at matching fidelity and can be applied directly to a game-like experience. This amounts to mirroring a 2D cinematic, passive moment with a 3D interactive moment. Once that moment is mirrored and interactive, the possibilities for exposition and embellishment become almost limitless. For this reason, the Trapped Ashes experiment is interesting, to say the least.

"I'm not entirely positive about this," Gaeta posits, "but I believe that the Baby in Utero created for Trapped Ashes may be the first high-definition, fully computer-generated content to be animated, composed and rendered with a realtime interactive graphics engine and then recorded to film for integration within a widescreen feature film experience. If there are any other crazy people out there trying this... I really want to meet them."

EA art director Habib Zargarpour and his team at EA Black Box have come up with another cutting-edge technology called ICE, which stands for In-game Camera Editor. It lets you create any kind of camera sequence you want to produce, such as a car commercial or previously animated scenes with actors, including keyframing camera positions, composing shots and editing.

"The basic strength of the process is that we have realtime rendering in high definition," explains Zargarpour, "and all the camera positioning and editing is done in realtime using the strength of the Xbox 360. We have the ability to generate high-definition images with realtime lighting and manipulate the position of the sun, manipulate cars, characters. The core of the system is based on the ability to animate the camera and edit the sequences all on the fly with one controller. So this makes the system extremely fast, because everything's running in realtime in high definition at 60 frames per second. You're not waiting to develop or export anything. You're not waiting for conversions. Everything is being rendered in realtime."

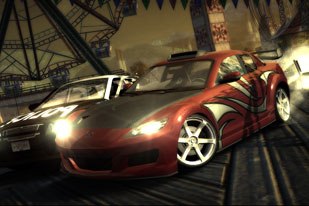

The team at EA built a continuous virtual city for Need For Speed: Most Wanted (from videogame producer Michael Mann), and ICE was used for all of the game's realtime cinematics. So, for example, if you were doing a car commercial, you could use virtual location scouting to find a location anywhere in the city, which has 120 kilometers of road. You could find an area where you want to perform the shot, select your car, customize it any way you want, and pick the time of day and the weather. You could drive the car in the game engine and perform the move or stunt that you want. Then, at the press of a button, you could start editing your shots to it.

What about the quality? Is it life-like, or does it have that CG look? "What you get from this system is crisper. It may not be 100% reality, but it's at the level you'd want it to be for a car commercial. I joke with my friends that we've already emptied the streets for you. You don't have to worry about people running around; they're cleared already. You can select the time of day and the weather you want. You can basically put the camera wherever you want. We've got cameras locked to the car, cameras following the car, and cameras that are in the world."

It sounds like ICE might put production assistants and weather forecasters out of business. But will it need additional equipment or software to operate? "It's a standalone system. You won't need any other CG software if you're just doing the car commercial aspect of it. However, if you're having animated people, they'll have to come from a different package. Using 3ds Max or Maya, you can animate the characters and bring them into ICE. Once you've done that, you can edit the scene pretty much however you want. You have at your fingertips full controls for what lens you're using, depth of field in realtime, in-betweening of the camera positions, all the lens controls you would want."
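
How ICE in-betweens camera positions is not documented here, so the following is only a sketch of one common approach: a uniform Catmull-Rom spline, which passes through every keyframed position and moves smoothly between them. The function names and the (x, y, z) keyframe format are hypothetical.

```python
def catmull_rom(p0, p1, p2, p3, u):
    """Point on the uniform Catmull-Rom segment from p1 (u=0) to p2 (u=1)."""
    u2, u3 = u * u, u * u * u
    return tuple(
        0.5 * (2 * b + (-a + c) * u + (2 * a - 5 * b + 4 * c - d) * u2
               + (-a + 3 * b - 3 * c + d) * u3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def camera_path(keys, steps_per_segment):
    """In-between a list of keyframed camera positions (x, y, z)."""
    # Duplicate the end keys so the first and last segments have the
    # neighboring points the Catmull-Rom formula needs.
    pts = [keys[0]] + list(keys) + [keys[-1]]
    path = []
    for i in range(1, len(pts) - 2):  # one segment per pair of adjacent keys
        for s in range(steps_per_segment):
            u = s / steps_per_segment
            path.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], u))
    path.append(tuple(float(v) for v in keys[-1]))  # land exactly on the last key
    return path
```

The same interpolation could be applied to other animated camera parameters, such as focal length or focus distance, by adding them as extra components of each keyframe tuple.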

How could it be used for feature films? "The level of realism is not quite 100% that of live-action filming. Within the context of wanting to show a nice car that's beautiful and clean, where you can see the metal flake in the paint, extremely detailed, this could definitely be the thing to use. You can certainly use it to plan action shots or car chase sequences for live action. You could basically do all your previs in realtime. One of the things I pointed out at the DGA Digital Day event is that we'd like to take the 'pre' out of previs."

As the camera engine EA used to create Need For Speed: Most Wanted on the Xbox 360, ICE offers some interesting interactive possibilities for users: "One thing people can do off the shelf, if they buy the game, is in fact move the sun around interactively. They just pause the game when they're in the open world by pressing the Start button and select Options -> Video. That takes them to a little slider that sets the time of day. You'll see all the shadows move dynamically."
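
All the shadows move together because each one is derived from the sun's direction, so changing the time of day changes every shadow at once. The toy model below is an assumption for illustration only, not EA's code and not real solar geometry: it ignores latitude, season and sun azimuth, and simply lets the sun rise at 6:00, peak at a hypothetical 60 degrees at noon, and set at 18:00.

```python
import math


def sun_elevation_deg(hour, noon_elevation_deg=60.0):
    """Toy sun elevation in degrees for a given hour, or None at night."""
    if not 6.0 <= hour <= 18.0:
        return None
    # Half a sine wave across the 12 daylight hours, peaking at noon.
    return noon_elevation_deg * math.sin(math.pi * (hour - 6.0) / 12.0)


def shadow_length(object_height, hour):
    """Shadow length cast on flat ground by a vertical object, or None if the sun is down."""
    elev = sun_elevation_deg(hour)
    if elev is None or elev <= 0.0:
        return None
    return object_height / math.tan(math.radians(elev))
```

Dragging a time-of-day slider corresponds to re-evaluating `shadow_length` (or, in a real engine, the full shadow computation) for every object in the scene each frame.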

Although EA has no specific plans at the moment to make ICE commercially available, Zargarpour says that a lot of people he talks to get very excited. "In general, people have been talking about the convergence of games and film, and this is one area on the tool side where it's really happening. More and more people are using game engines to do visualizations for film. As the next-generation consoles get more powerful, the ability to match film quality [gets] closer and closer; that's certainly going to be an evolving thing over the next few years. So, it's an exciting area where we can have a lot of things be interactive. It makes a huge difference, I think, even in doing visuals for something that is computer-generated, if you can dial in everything in realtime."

Meanwhile, the final emerging technology we looked at is the work of Paul Debevec and Charles-Felix Chabert on Relighting Human Locomotion using Light Stage techniques at USC's ICT (Institute for Creative Technologies) lab. "The goal of this project is to make it possible to capture a full-body performance of an actor so that in post-production one can freely move the point of view around the scene and change the illumination," Chabert explains. "This makes it possible to film an actor's performance in a studio and then photorealistically composite their image into any photographed or virtual environment with the correct viewpoint and illumination."

In their first project, they focused on capturing repetitive human locomotion for a practical reason: it let them effectively multiply their three cameras into many cameras. The relighting technique builds on earlier Light Stage techniques (see www.debevec.org/Research/LS/ and http://gl.ict.usc.edu/research/LS5/).

"It is based on capturing many photographs of a still scene under many different lighting conditions. By combining these images, we can then create a view of the same scene under novel illumination. To capture an actor under different lighting conditions, we place him at the center of a dome of LED light sources. Each lighting condition is created by turning on a set of LEDs together. When the actor is performing, the scene is no longer static, so we use high-speed cameras filming at about 1,000 frames per second. The lighting conditions change synchronously at the same rate, which gives us about 30 different lighting conditions per video frame. Even though motion happens during that 30th of a second, we can compensate for it using optical flow techniques. This allows us to change the illumination of each video frame and so relight the entire performance."
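
Combining the captured images works because light adds linearly: a scene lit by several sources looks like the sum of the scene lit by each source alone. The sketch below assumes each captured lighting condition is used directly as a basis image and ignores color and the optical-flow motion compensation Chabert mentions; if the conditions are patterns of several LEDs rather than single lights, the weights would first have to be solved for per pattern. The second helper checks the timing arithmetic: at 1,000 frames per second and 30 video frames per second, there is room for 33 lighting conditions per video frame, which the text rounds to about 30. Both function names are hypothetical.

```python
def relight(basis_images, weights):
    """Relight a scene as a weighted sum of its basis images.

    basis_images[k] is the grayscale image (list of rows) captured under
    lighting condition k; weights[k] is how bright that condition should be
    in the new, synthetic illumination.
    """
    h, w = len(basis_images[0]), len(basis_images[0][0])
    out = [[0.0] * w for _ in range(h)]
    for img, wk in zip(basis_images, weights):
        for y in range(h):
            for x in range(w):
                out[y][x] += wk * img[y][x]
    return out


def conditions_per_frame(capture_fps=1000, video_fps=30):
    """Whole lighting conditions that fit into one output video frame."""
    return capture_fps // video_fps
```

In practice the weights would come from sampling a target environment (for example, a light probe of the set the actor is composited into) in the direction of each light source.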

They built Light Stage 6 in order to capture a full-body performance. It is two-thirds of an eight-meter geodesic dome outfitted with 931 lights. "We designed custom floor lights to behave the way a Lambertian ground plane would. These lights have a Lambertian distribution, so they cast less light on the actor's feet than on the upper body." To make it possible to control the viewpoint, they needed to capture the performance from different points of view. "Since we are capturing repetitive human locomotion, we set up a vertical array of three cameras and have the actor walk on a treadmill that slowly rotates on a turntable, so each camera sees each walk cycle from a different point of view. In our case, the actor performs 36 walk cycles over a full turntable rotation, which gives us 36 viewpoints per camera. Then, using a view interpolation technique we developed for this project, we can render the actor from any point of view."
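
The turntable arithmetic is easy to check: 36 walk cycles per rotation puts each camera's captured views 360 / 36 = 10 degrees apart, and three cameras at different heights give 3 x 36 = 108 captured viewpoints. The ICT view interpolation technique itself is not described in the article; the helper below only finds the two captured angles that bracket a requested one plus a linear blend weight between them, a naive stand-in with hypothetical names.

```python
def captured_angles(walk_cycles_per_rotation=36):
    """Turntable angles (degrees) at which each camera sees one walk cycle."""
    step = 360.0 / walk_cycles_per_rotation
    return [i * step for i in range(walk_cycles_per_rotation)]


def nearest_views(angle_deg, walk_cycles_per_rotation=36):
    """Return (lower_angle, upper_angle, weight_of_upper) bracketing angle_deg."""
    step = 360.0 / walk_cycles_per_rotation
    a = angle_deg % 360.0  # wrap requests into [0, 360)
    i = int(a // step)
    lower = i * step
    upper = ((i + 1) % walk_cycles_per_rotation) * step  # wraps 360 back to 0
    return lower, upper, (a - lower) / step
```

A simple cross-fade with these weights would ghost a moving body, which is presumably why the project developed its own interpolation technique rather than blending neighboring views directly.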

"The system consists of both hardware and software. For the hardware part, we built Light Stage 6 and developed the drivers and interfaces to control it. The capture is done using three standard-definition high-speed cameras, each with 8GB of internal memory, which allows us to film about 45 seconds of footage at 1,000 frames per second. For the software, we developed internal tools for preprocessing the captured data. We developed techniques to compute relightable shadow maps and alpha matte images. We developed a GPU renderer used for previewing and for producing the results. We used Maya to generate the actor subject and camera path, and Shake to composite the shots used for the SIGGRAPH 2006 Computer Animation Festival."
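
The quoted capture limits imply a per-frame storage budget for each camera. Taking 8GB as 8 x 10^9 bytes (the article does not say whether decimal or binary gigabytes are meant):

```latex
\begin{aligned}
45\ \text{s} \times 1000\ \tfrac{\text{frames}}{\text{s}} &= 45{,}000\ \text{frames} \\
\frac{8 \times 10^{9}\ \text{bytes}}{45{,}000\ \text{frames}} &\approx 1.78 \times 10^{5}\ \tfrac{\text{bytes}}{\text{frame}}
\end{aligned}
```

That is roughly 178 KB per frame, or about 191 KB if 8GB means 8 x 2^30 bytes.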

What does Chabert see as the most impressive aspect of their work so far? "This is the first time that such a data set, spanning time, illumination and viewpoint, has been captured, and it's the first time that one has the possibility of photorealistically changing the illumination and the viewpoint of a captured live-action performance. The only manual effort needed to achieve a realistic result is the scene setup, positioning the subject and the camera in Maya, and finally compositing the result."

Chabert imagines that such techniques will have an impact on the way live-action performance is mixed with computer-generated environments in the near future. An artist could take the performance data, manipulate the performance as a 3D object, place it in a 3D environment according to the filmmaker's wishes, and render the performance and the 3D environment as a whole, with a consistent point of view and illumination.

J. Paul Peszko is a freelance writer and screenwriter living in Los Angeles. He writes various features and reviews as well as short fiction. He has a feature comedy in development and has just completed his second novel. When he isn't writing, he teaches communications courses.

| Attachment | Size |
|---|---|
| 3014-homelands03c05.mov | 1.48 MB |
| 3014-trappedashes.mov | 3 MB |