VFX supervisor Axel Bonami details MPC’s development of new shooting techniques and software tools to create the stunning visuals for the live-action adaptation of the popular manga series.

Paramount Pictures’ remake of the 1989 Japanese manga series Ghost in the Shell is a futuristic visual effects spectacle that tries to pay homage to the anime world in live action. Directed by Rupert Sanders, the film stars Scarlett Johansson as Major -- a woman whose brain is implanted in a cyborg body after a terrible crash nearly takes her life. Her new cyborg abilities make her the perfect soldier, but she yearns to learn about her past.

Ghost in the Shell was filmed primarily at Wellington, New Zealand’s Stone Street Studios, with additional shooting in Hong Kong and Shanghai. WETA Workshop handled the on-set practical effects, but to fully realize the futuristic world of Ghost in the Shell and some of its fantastic cyborg creatures, the filmmakers tapped international visual effects facility Moving Picture Company as the lead VFX vendor, giving them more than 1,000 shots. The complex work required a close collaboration between the director, production VFX supervisors John Dykstra and Guillaume Rocheron, and MPC’s teams in Montreal, London and Bangalore led by VFX supervisors Arundi Asregadoo and Axel Bonami.

Several other VFX facilities also contributed to the film, including Atomic Fiction, Framestore, Method Studios, Raynault VFX and Territory Studio.

Bonami explained that MPC’s work included creating futuristic buildings and cityscapes, holograms, “ghost cam” fly-overs of the city, extensive set extensions, digital doubles and digital crowds, as well as elaborate sequences like the shelling sequence, the deep dive sequence, the spider geisha and Major’s thermoptic invisible suit. He added that the facility had about nine months to deliver the final shots, although VFX supervisor Rocheron, also from MPC, was involved in preproduction planning for almost two years, along with Dykstra.

“We work globally, so we have different departments in different locations,” he explains. “We have some of our asset work and look dev work that is based in London with the help of Bangalore. Then internally in Montreal, we had two units that were covering around like, 700 to 800 shots. Then we had a Bangalore team as well taking over 200 to 250 shots.

“So we kind of split in different units, depending on different sequences and that allows us to have a higher workload,” he says. “I think we had like almost 800 artists work on the show globally. And at its peak, we had 600 artists working at the same time, in the last month.”

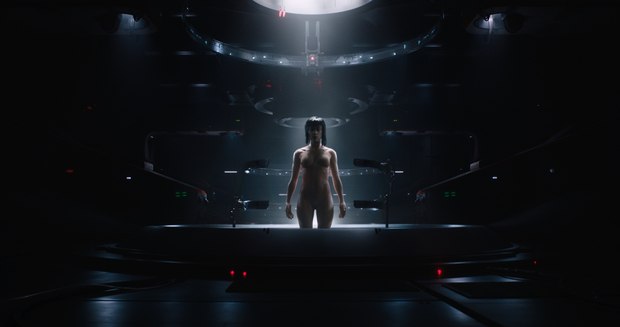

The film opens with the shelling sequence, which shows Major being “shelled” inside her new cyborg body. The sequence, which pays tribute to the anime version, was created using a blend of computer graphics and practical animatronics.

“We shot the entire sequence with a prop and then replaced the body with the full CG, matching camera-moves and integrating it into the created set,” Bonami recounts. “Visually it’s quite strong, and we spent a lot of time on the shot composition and on the colors. Jess Hall, the DP, did really cool work on the color palette in the movie that was established very early on. All the lighting was based on that color palette that we had on our end as well.”

Bonami explained that MPC created a skeleton and musculature for a digital Major during the shelling sequence that allows audiences to see through her into the negative spaces inside. Her face, however, is real. “We do that a lot these days -- hybrids, where we use the actual actor… then we just keep the facial features that we can then apply to CG panels on the face,” Bonami says.

One of the biggest tasks for MPC was to create the movie’s iconic world. The story is set in an unnamed, multicultural, futuristic city that had to be transformed from the original anime version into a photoreal environment. The visual effects team created a library of futuristic buildings and vehicles, elevated highway systems and traffic and crowd simulations.

In addition to background shots and set extensions, the city is showcased in technically complex CG flyovers in the film, in shots dubbed “Ghost Cams.” These shots helped set the stage, and were integral to the storytelling.

One of the biggest challenges was the creation of the gigantic holographic billboard advertisements that clutter the cityscape, called “Solograms.” The city is filled with them. In total, MPC created 372 Solograms in different sizes and shapes to populate the city shots.

Bonami explained that to capture these photoreal volumetric displays, the movie’s production team used a new custom-made rig, built by Digital Air, consisting of 80 2K cameras running at 24 fps, to capture volumetric footage of actors, which could then be mapped into the Solograms using photogrammetry.

Digital Air’s “4D scanning systems” record full body performances in 3D. The results can be integrated into composite shots with final lighting and camera movement deferred to the visual effects team.

Digital Air also helped MPC develop a workflow to bring those elements into their visual effects pipeline and helped the company’s R&D team develop new software tools to enable them to reconstruct, process, manipulate and lay out the volumetric data in the shots. Processing the data involved “solving” more than 32,000 3D scans.

“There were two steps on the solograms,” Bonami recounts. “The solograms were the big billboards in the city, that’s why we shot with a rig of 80 different camera angles. Then we just apply photogrammetry to it, which is where we generate the geometry with texture painting for every single frame.

“Then we created a tool to convert all the animated clips into a possible version of it. That means we were applying a 3D grid to it to create the pixels,” he adds. “Then we could create different sizes of ads, and the different spacing and different quality of billboards. In parts of the city where they have a lot of money, there are very high density, high-res ads. And then when you are in the most dodgy part of the red-light district, then it starts getting a bit more pixelized and there’s a digital artifact to it.”
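The grid step Bonami describes can be illustrated with a minimal Python sketch. This is not MPC's tool: the `voxelize` function, the `(x, y, z, r, g, b)` point format and the `cell_size` parameter are all hypothetical, chosen only to show how snapping scanned points onto a coarser or finer 3D grid changes the apparent resolution of a volumetric image.

```python
from collections import defaultdict
from math import floor


def voxelize(points, cell_size):
    """Snap colored 3D points onto a regular grid, averaging color per cell.

    points: iterable of (x, y, z, r, g, b) tuples (hypothetical input format).
    cell_size: edge length of one grid cell; larger values look more pixelized.
    Returns a dict mapping an integer cell index (i, j, k) to the mean (r, g, b).
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    # Per cell: running sums of r, g, b plus a point count.
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z, r, g, b in points:
        key = (floor(x / cell_size), floor(y / cell_size), floor(z / cell_size))
        acc = sums[key]
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1

    return {
        key: (acc[0] / acc[3], acc[1] / acc[3], acc[2] / acc[3])
        for key, acc in sums.items()
    }


# Three illustrative points: red, blue and green.
sample = [
    (0.1, 0.1, 0.1, 1.0, 0.0, 0.0),
    (0.4, 0.2, 0.3, 0.0, 0.0, 1.0),
    (1.2, 0.1, 0.1, 0.0, 1.0, 0.0),
]

fine = voxelize(sample, 0.25)   # dense grid: every point keeps its own cell
coarse = voxelize(sample, 2.0)  # sparse grid: all points merge into one cell
```

In this toy version, a small `cell_size` stands in for the high-density ads of the wealthy districts, and a large one for the blockier billboards of the red-light district.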

Bonami added that they also created a low-res version that they could show to the director and use as a guide to lock down the layout. “Then it was rendering, having both the light interaction of the Sologram itself bouncing into the buildings, as well as lighting the environment.”

In an abstract scene dubbed “The Deep Dive,” Major navigates around a Geisha bot’s memory. MPC’s artists rendered and simulated full CG characters decaying as time passes, varying the clarity of their representation based on the viewing angle.

Bonami explained that a 150-camera DSLR rig was used to capture a static CG version of the actors frozen mid-movement, so they could be recreated digitally and deconstructed during the sequence. The sequence also called for transition effects and crowd simulation.

Major’s thermoptic suit -- a second skin that allows her to become invisible -- and its invisibility effect are shown in a number of sequences throughout the film, from the skyscraper jump to the water-filled courtyard fight. For the courtyard fight sequence, the complexities lay in blending a 360-degree full CG environment, built to recreate the look and scale of the original manga, with practical and CG water effects and the thermoptic suit’s invisibility effect.

Bonami explained that Johansson was shot practically (in a costume designed by Kurt and Bart with WETA Workshop), but the visual effects team was able to use a CG double to hide any folds or creases in the suit, “to just kind of thin out the suit so it’s really, really tight. It was invisible but we made it physical in a way. The challenge of creating an invisible suit was creating an invisible, visible suit.”

The final spider geisha sequence showcases an array of digital character animation, CG environments and explosive destruction simulations. The fully digital environment was partially re-created using 2D elements taken at a Hong Kong location mixed with other types of architecture in order to create a unique location.

“We also had the spider geisha itself, which had its face open, and then breaks out the limbs and walks like a spider, which was shot, partly practical for the first part of the sequence, and then moves into full CG when she breaks out the limbs and climbs up the wall,” explains Bonami. “The face opening was computer-generated as well.”

Overall, Bonami says that every shot posed its own unique challenges, technically and look-wise, but perhaps the biggest challenge was getting the vision right and making sure they were serving the story, rather than “showing off.”

“Some of the visuals of the city are quite eye-popping, but... the VFX works well when you don’t get distracted by it, but then afterward, you think, ‘Oh maybe that’s just CG,’” he says. “That’s when our work is done.”

“What I think works visually in this movie is the combination of having really nice set design, costume design and then the visual effects -- putting all that together, blending live action into computer generated, and then having all the input of all these departments,” Bonami concludes. “For me, the best is when we blend and connect all the different parts of movie making, and not having the actors on the full greenscreen, not always knowing what they’re doing, against a full computer-generated background. I just really prefer to top-up and blend. While we do end up with fully computer-generated shots, when you jump from one to the other, there’s no knowing what part is real and what part is fake.”

Scott Lehane is a Toronto-based journalist who has covered the film and TV industry for 30 years. He recently launched VRNation.tv -- an online community for VR enthusiasts.