Bill Desowitz uncovers what new wrinkles Sony Pictures Imageworks came up with in conquering Beowulf, the new performance capture hybrid from Robert Zemeckis.

When director Robert Zemeckis was in the middle of riding The Polar Express a few years back, he already knew that he would make Beowulf using the same hybrid performance capture method. Fortunately, the technology has improved enough to pull off his adult animated vision of the oldest surviving example of Anglo-Saxon heroic poetry, and that means a lot more realism.

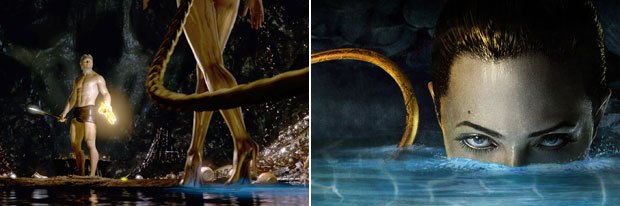

Indeed, The Uncanny Valley is not quite as uncanny, thanks to advancements in performance capture techniques and 3D animation at Sony Pictures Imageworks. Just look at Beowulf (portrayed by Ray Winstone as both a young and an elderly man), King Hrothgar (played by Anthony Hopkins), the monstrous Grendel (performed by Crispin Glover) or Grendel's mother (captured by the one and only Angelina Jolie). The layering of detail upon detail is striking: their eyes are a bit more authentic, their skin a lot more revealing and their movements more believable. Likewise, the hyper-real environments and VFX are the result of other breakthroughs at the studio. Coupled with refinements in stereoscopic transfer, Beowulf (which opened Nov. 16 from Paramount Pictures) is the latest poster child for 3-D in all its immersive glory. And rightly so, given that the 3-D engagements in IMAX, Real-D and Dolby accounted for nearly half of the first weekend's box office gross. No wonder there's potential Oscar talk in both the animated feature and VFX categories.

"Details, details, details" seemed to be the mantra, according to Visual Effects Supervisor Jerome Chen, who also worked on The Polar Express. And Chen really didn't appreciate the full extent of this until first viewing Beowulf in 3-D. "I have come to realize that this should be the native format that audiences should experience," Chen suggests. "Bob's movies are always instinctively 3-D and, like James Cameron, he understands the stereoscopic aesthetic, because he likes objects to come up to the camera and he likes to break the screen plane and come out toward the viewer. And what's fascinating to me is that you get to look around at things, and we have an incredible amount of detail in Beowulf, both geometric and textural, in our models and the clothes and the faces. I had forgotten how much stuff the artists had put in there."

"In terms of stats, there are probably only 800 shots in the whole film. Bob likes to have very long concept shots. He likes to connect the story into one long camera move and show all the different perspectives. However, it not only makes for incredibly long shots but it also complicates things as you pan from one side of an environment to another. You have to light two directions and then you have longer rendering times. And in Bob's case, you always have some other effects element that has to be incorporated."

Here are some other Beowulf stats:

- 297 unique character variations
- 28 primary characters, each often requiring multiple costume and hair styles
- 15 secondary characters, which also required full-body hair as well as highly detailed cloth models
- 20 large-scale environments, ranging in size from the Mead Hall, packed with 1,000+ props, to the vast, oak-lined Dark Forest
- Thousands of modeled props and set pieces, ranging in complexity and size from a simple spoon and fork to the standard sword and shield of the era and, ultimately, large-scale siege weapons
- 250+ unique character props

"When we started talking about how to do Beowulf, we didn't know that we were going to go this realistic and detailed; that evolved over time," Chen continues. "The evolution of that was predicated by the motion capture performances of the characters and how the keyframe animation was applied on top of them. As we looked at these characters, we realized that we wanted more and more detail on the faces, on the clothes, on the world. The performances of the characters were so big in scope. We noticed that these faces needed more muscle detail, more texture in the eyes, eyelashes to have certain detail, the hair on the head needed to be more detailed. It got to the point with Hrothgar that we wanted to get so close that we could see hair in his ears and nostrils. And then when the light hit the side of his face, we wanted to see peach fuzz."

For Beowulf, Imageworks developed new human facial, body and cloth tools. Beowulf was a challenge, of course, because he looks nothing like Winstone and is portrayed at different ages. "Grendel is another interesting character," Chen suggests. "We were originally going to keyframe him, but Bob wanted to use Crispin Glover to capture his tortured soul. The intent was to make him look like a giant mutant child suffering from every known skin disease. We had to dial him back so he didn't look like a corpse, using new RenderMan shaders for the look of the skin. Because he's 12 feet tall, the animators had to create a different sense of timing, height and momentum but stay true to the performance."

According to MoCap Supervisor Demian Gordon, they initially pushed the volume during the capture sessions. "We maintained the height and captured 16- to 21-person shots. We used a much higher marker count, so we had full hands on Beowulf for the first time. We also had a high-res face on Beowulf for the first time. Our ability to see markers improved and we had fewer cameras overall. We used 250 Vicon cameras with custom software for Sony that only four people in the world know how to run. We had 18 capture systems wired together in one super system."

"Because of the nature of Beowulf and so many people in the volume in the Mead Hall or during the dragon battle, it required us to come up with an elegant solution for keeping track of various props. No props were captured on Monster House, but all of them were on Beowulf. We color-coded every prop so you could tell what you were seeing in the video reference. If there were 16 swords in the video, you needed to know which particular CG asset went with that physical prop. Everything that went into or came out of the volume, we bar-coded. We had the equivalent of a grocery clerk checking all the data. File sizes went through the roof with all these extra markers, so we made a complex system of slave PCs that listen for every capture, and when each capture is done they chop it up into manageable bits, process them and piece them back together. Even so, we had to hack into Windows to deal with the large data sizes. We had to stream data in one big chunk with a lot of automated processors, but in the end it's a very organic kind of filmmaking that you get, because the actors perform and then Bob gets to execute his vision without being aware of all the things we've implemented on the backend."
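Gordon's slave PCs that chop each capture into pieces, process them and stitch the results back together follow a classic split/process/reassemble pattern. The sketch below is a minimal Python illustration under stated assumptions, not Imageworks' actual system: a take is assumed to be a list of frames of (x, y, z) marker positions, and the per-chunk step (a millimeter-to-meter conversion) is a hypothetical stand-in for real work such as marker labeling or gap filling.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Frame = List[Tuple[float, float, float]]  # one (x, y, z) position per marker


def split_into_chunks(frames: List[Frame], chunk_size: int) -> List[List[Frame]]:
    """Chop one take into consecutive, non-overlapping chunks of frames."""
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]


def process_chunk(chunk: List[Frame]) -> List[Frame]:
    """Hypothetical per-chunk work: convert millimeters to meters.

    A real pipeline would label markers, fill gaps, filter noise, etc.
    """
    return [[(x / 1000.0, y / 1000.0, z / 1000.0) for (x, y, z) in frame]
            for frame in chunk]


def process_take(frames: List[Frame], chunk_size: int = 240,
                 workers: int = 4) -> List[Frame]:
    """Split a take, process the chunks in parallel, then stitch them back."""
    chunks = split_into_chunks(frames, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so reassembly is a flat concat
        processed = list(pool.map(process_chunk, chunks))
    return [frame for chunk in processed for frame in chunk]


# Usage: a 1,000-frame take with a single marker
take = [[(float(i), 0.0, 1000.0)] for i in range(1000)]
result = process_take(take)
```

Threads keep the sketch self-contained; CPU-heavy solving would more likely run in separate processes or on separate machines, as Gordon's setup did. Steps that look across frames, such as gap filling, would also need chunks that overlap slightly so each piece has context at its edges.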

For greater detail, the solver was adjusted to merge the two capture systems used on The Polar Express and Monster House. On the former, the markers on the actor's face moved the skin directly; on the latter, they were interpreted to trigger certain shapes and poses on the face. Combining the two means, for example, that you can keep Anthony Hopkins' facial movement to some degree but change him into his character much more controllably. This added to the sense of realism and made the rig far easier for animators to use: they can either turn on a particular pose or shape or go down to the muscle level and play with jiggle, so they have two sets of tools in one superset.
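One way to picture the merged solver is as a blend between the two approaches: a shape-driven layer (the neutral face plus weighted pose deltas, as in a standard blendshape rig) and a marker-driven layer that offsets the skin directly. The Python sketch below assumes a single `mix` control between them; the function names, the flattened-coordinate representation and the linear blend are illustrative assumptions, not the studio's actual rig.

```python
from typing import Dict, List

Coords = List[float]  # flattened vertex coordinates: x0, y0, z0, x1, ...


def blendshape_layer(neutral: Coords, deltas: Dict[str, Coords],
                     weights: Dict[str, float]) -> Coords:
    """Shape-driven layer: neutral face plus weighted pose deltas."""
    out = list(neutral)
    for name, weight in weights.items():
        for i, d in enumerate(deltas[name]):
            out[i] += weight * d
    return out


def marker_layer(neutral: Coords, marker_offsets: Coords) -> Coords:
    """Marker-driven layer: solved marker offsets move the skin directly."""
    return [n + m for n, m in zip(neutral, marker_offsets)]


def hybrid_face(neutral: Coords, deltas: Dict[str, Coords],
                weights: Dict[str, float], marker_offsets: Coords,
                mix: float) -> Coords:
    """Linear blend: mix=0 is purely shape-driven, mix=1 purely marker-driven."""
    shaped = blendshape_layer(neutral, deltas, weights)
    direct = marker_layer(neutral, marker_offsets)
    return [(1.0 - mix) * s + mix * d for s, d in zip(shaped, direct)]
```

Keeping the two layers separate until the final blend is what lets an animator work at either level: dial pose weights, or touch the direct offsets, without rebuilding the other.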

Keyframe animation on top of the performance capture is still key in these hybrid features, as they are not yet able to animate in a single pass. Imageworks animators keyframed mouth, tongue, cheeks and brows to attain greater subtlety and later added cloth, hair, lighting and vfx. On Beowulf, they developed 125 controls to mimic facial expressions and an even greater number of muscle controls. They also pushed the expressions further by adjusting the rig, and they readily admit that female characters are a lot less forgiving than male ones, so those required additional shading and lighting tweaks. They even made tools to go back to animation and fix performances after lighting (with lots of eyeline changes).

The eyes, of course, were most crucial. According to Animation Supervisor Kenn McDonald, it begins with the EOG (electrooculography) system (four electrodes around the eye sockets), whose readings drive the eye animation on the actor's face. In discussing eye movement and physiology with specialists, the animation team discovered that the hardest part of crossing The Uncanny Valley is the involuntary eye movements, and that's what they set out to capture through improved EOG, shading and CGI.
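EOG works because the eye carries a standing electrical potential between cornea and retina, so electrodes beside the eye sockets pick up a voltage that changes with gaze direction and, over a moderate range, is roughly proportional to the gaze angle. A minimal calibration sketch, assuming the actor fixates on targets at known angles, fits a straight line from voltage to angle and clamps the result to an assumed linear range of ±30 degrees. The function names, units and that limit are hypothetical, not details of the Imageworks setup.

```python
from typing import List, Tuple


def fit_eog_calibration(voltages_uv: List[float],
                        angles_deg: List[float]) -> Tuple[float, float]:
    """Least-squares line: angle = gain * voltage + offset.

    Calibration data: EOG readings (microvolts) taken while the actor
    fixates targets at known horizontal angles (degrees).
    """
    n = len(voltages_uv)
    mean_v = sum(voltages_uv) / n
    mean_a = sum(angles_deg) / n
    sxx = sum((v - mean_v) ** 2 for v in voltages_uv)
    sxy = sum((v - mean_v) * (a - mean_a) for v, a in zip(voltages_uv, angles_deg))
    gain = sxy / sxx
    offset = mean_a - gain * mean_v
    return gain, offset


def gaze_angle(voltage_uv: float, gain: float, offset: float,
               linear_limit_deg: float = 30.0) -> float:
    """Convert one EOG sample to a gaze angle, clamped to the assumed linear range."""
    angle = gain * voltage_uv + offset
    return max(-linear_limit_deg, min(linear_limit_deg, angle))


gain, offset = fit_eog_calibration([-300.0, -150.0, 0.0, 150.0, 300.0],
                                   [-30.0, -15.0, 0.0, 15.0, 30.0])
```

Converting every sample to a continuous angle, rather than snapping between a handful of keyed eye poses, is in principle what lets small, involuntary movements carry through to the animation.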

Then there were the environments, which had to have the same level of detail as the characters so they wouldn't stand out. "There was always a wealth of photographic detail that we could get," Chen offers. "We always want to incorporate some level of reality in the textures. We would find photos of stone and castle walls and incorporate that into environments."

As the story spans 40 years, most of the action occurs inside Heorot Castle (in new and old versions) and later across 100 square miles of coastal Denmark during a battle with a mutant dragon.

"Part of the challenge is designing dynamic sets and creating believable textures," Chen adds. "We made a stylistic choice in having Mead Hall inside the castle expand. The ceiling becomes three times its normal height when Grendel arrives for his horrific attack. The lens is wide at the same time: a combination of a wide-angle lens and taking advantage of the visual medium and growing the set."

"For the dragon attack, we were very detailed on the ground and in the air. We were clever about picking and choosing where to show trees and shrubs. We used matte paintings [working in Cinema 4D and Photoshop] for larger environments. It's an identical philosophy to making a live-action movie. At this point, the line is blurred between live action and animation. I used everything I would have for live action. The difference is that I'm now creating the main characters as an illusion too, which is an outgrowth of what we did on Stuart Little, only now with humans."

This was the largest vfx crew assembled at Imageworks, with a team of nearly 450. Chen says it exceeds even the Spider-Man franchise in terms of the wide range of effects. "Usually we do fire or water continuously. Here we had to do all of it, including scenes where Beowulf fights sea monsters during a storm at sea. We did full water simulation and additional particle work for interaction and characters in the water: the rain, the storm and mist blowing off."

"We basically rewrote the front end of our pipeline for Beowulf to be more efficient in data management. It got to the point on previous movies where it could take 45 minutes just to open a Maya file, and saving used to take 15 minutes. We designed the system so it would load very quickly and cut save time literally from minutes to seconds. When we broke apart the scope of the movie on a technical level, we had about 100 shots with more than 20 people, sometimes up to 100. We needed a system allowing you to selectively edit one or two people and not have to re-render everything when reviewing the animation. We came up with a method where you could cache different levels of your render and then do a Z-based composite and layer everything together. It sounds so elementary but requires an enormous amount of data management tools that were created in-house. We spent four to five months writing this infrastructure. Data-wise, we even broke the efficiency that we created for animated films. This was a lot more complex."
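The Z-based composite described here can be sketched per pixel: each cached layer (say, one per character or group) stores a premultiplied color, an alpha and a depth, and the layers are sorted nearest-first and combined with the front-to-back "over" operator. Re-rendering one character then means replacing only its layer. The grayscale, one-sample-per-pixel representation below is a simplifying assumption; production depth compositing also has to handle anti-aliased edges and motion blur, which a single depth value per pixel cannot represent.

```python
import math
from typing import List, Tuple

# One layer = one cached render; each pixel is (premultiplied color, alpha, depth).
# Grayscale and one sample per pixel are simplifying assumptions.
Sample = Tuple[float, float, float]
Layer = List[Sample]

EMPTY: Sample = (0.0, 0.0, math.inf)  # nothing rendered in this pixel


def z_composite(layers: List[Layer]) -> List[Tuple[float, float]]:
    """Merge cached layers per pixel, nearest first, with the 'over' operator."""
    width = len(layers[0])
    result = []
    for px in range(width):
        samples = sorted((layer[px] for layer in layers), key=lambda s: s[2])
        color, alpha = 0.0, 0.0
        for c, a, _depth in samples:
            # Whatever is already composited in front attenuates this sample.
            color += (1.0 - alpha) * c
            alpha += (1.0 - alpha) * a
        result.append((color, alpha))
    return result


# Two cached character layers, three pixels wide
hero = [(0.8, 1.0, 5.0), EMPTY, (0.25, 0.5, 2.0)]
crowd = [(0.2, 1.0, 10.0), (0.2, 1.0, 10.0), (1.0, 1.0, 3.0)]
frame = z_composite([hero, crowd])
```

If only the hero's performance changes, only `hero` needs re-rendering; `crowd` is reused from the cache and the composite is rerun.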

Fortunately, Imageworks was able to leverage some of the technology from previous features, including Katana, the lighting software introduced on Spider-Man 3. "We realized early on that there were so many components coming into lighting, which needed Look Dev standards, that we needed to tie in an asset management system," explains CG Supervisor Theo Bialek. "We developed a plug-in for Katana that allowed all those components coming from different departments to go through an approval process, and once approved they were added to a lighting workspace, which lists what should go into each shot. We kept track of approved assets from animation, cloth, which was driven off animation, and hair, which was driven off the cloth and animation. We had an automated asset system that would know when a new version of an asset was coming through, so a lighter was always working off the most recent version of that asset for that particular shot. It meant that anytime a change happened down the pipe, the lighters were immediately notified and updated with assets."
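Bialek's approval workflow can be illustrated with a small tracker that records the latest approved version of each asset per shot, ignores stale approvals, notifies subscribed lighters and reports which downstream assets may need a refresh (cloth driven off animation, hair off cloth and animation, as he describes). Everything here, from the class name to the dependency table and shot names, is a hypothetical sketch rather than the Katana plug-in itself.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

# Assumed dependency table, following Bialek's description:
# cloth is driven off animation; hair off cloth and animation.
DOWNSTREAM: Dict[str, Tuple[str, ...]] = {
    "animation": ("cloth", "hair"),
    "cloth": ("hair",),
}

Listener = Callable[[str, str, int], None]  # called with (shot, asset, version)


class ApprovedAssetTracker:
    """Hypothetical per-shot registry of the latest approved asset versions."""

    def __init__(self) -> None:
        self._approved: Dict[Tuple[str, str], int] = {}
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def subscribe(self, shot: str, listener: Listener) -> None:
        """A lighter registers interest in one shot."""
        self._listeners[shot].append(listener)

    def approve(self, shot: str, asset: str, version: int) -> List[str]:
        """Record an approval; return downstream assets that may now be stale."""
        key = (shot, asset)
        if version <= self._approved.get(key, 0):
            return []  # older or duplicate approval: ignore it
        self._approved[key] = version
        for listener in self._listeners[shot]:
            listener(shot, asset, version)
        return list(DOWNSTREAM.get(asset, ()))

    def workspace(self, shot: str) -> Dict[str, int]:
        """What the lighter should load for this shot: latest approved versions."""
        return {asset: v for (s, asset), v in self._approved.items() if s == shot}
```

Rejecting versions at or below the current one keeps a late-arriving approval of an old take from silently replacing newer work in a lighter's shot.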

The shot lighting team did 210 shots out of 700+ in the movie. "Doing ambient occlusion wasn't possible on this grand a scale with 100 characters in close quarters in a Mead Hall with different light sources. Rendering shadows for all those lights and all those characters interacting was another challenge. We used brick maps for ambient occlusion. So in a large environment where people don't move, we would cache that data. But you also have to deal with the elements that are moving, that are changing from shot to shot, that you can't cache, such as characters moving on a table or a prop being picked up. We had a clever system of combining cached brick maps with camera projection-based ambient occlusion. We also used indirect lighting, which has been gaining popularity in RenderMan. We store the radiance cache in a point cloud data file format, which stores the diffuse and specular lighting in shadows. We use that to do a single bounce pass, which is very useful if you have a bright spot on a character, and you can bounce light into the dark areas. This adds an extra level to the style they were going for. With such high contrast lighting, the extra bounce pass helped soften nuances around the eyes of characters."
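Two ideas from this passage can be sketched together: a bake-once cache of static occlusion per environment cell (standing in for a brick map), and a per-frame shading step that combines that cached term with a visibility term for moving elements and adds one bounce of cached indirect light. The multiplicative combination of the two visibility terms, the grayscale diffuse shading model and all names are assumptions made for illustration; this is not RenderMan's brick map or point-cloud API.

```python
from typing import Callable, Dict, Tuple

Cell = Tuple[int, int, int]  # voxel index within an environment


class StaticOcclusionCache:
    """Bake-once store for static-set occlusion, standing in for a brick map.

    `compute` is a hypothetical, expensive occlusion calculation; it runs
    once per (environment, cell) and the result is reused for every shot.
    """

    def __init__(self, compute: Callable[[str, Cell], float]) -> None:
        self._compute = compute
        self._store: Dict[Tuple[str, Cell], float] = {}
        self.misses = 0

    def visibility(self, env: str, cell: Cell) -> float:
        key = (env, cell)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._compute(env, cell)
        return self._store[key]


def shade(albedo: float, direct: float, ambient: float, bounce: float,
          static_vis: float, dynamic_vis: float) -> float:
    """Grayscale diffuse shading.

    static_vis comes from the cache; dynamic_vis from a per-frame pass for
    moving characters and props. Multiplying them is an assumption: either
    source of occlusion darkens the point. `bounce` is one bounce of cached
    indirect light, which lifts the dark side of a brightly lit face.
    """
    visibility = static_vis * dynamic_vis
    return albedo * (direct + (ambient + bounce) * visibility)
```

The split mirrors the quote: the static term is paid for once per environment, while only the moving elements need fresh occlusion each shot.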

For rendering so much data, they decided to use a foreground occlusion rendering method. "You use the alpha of your foreground render and you erode it in, and you need to allow extra pixels there so that when you do depth of field in layering, you have something to blur into. But we saved 50% rendering time when you have characters moving around in the foreground." This was new to Imageworks.
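The foreground occlusion trick can be sketched as building a holdout mask: take the pixels where the foreground render is fully opaque, erode that region by a margin so depth-of-field blur still has background to blur into, and skip rendering the background wherever the eroded mask is set. The square erosion window, the opacity threshold and the conservative handling of frame edges below are assumptions, not details from Imageworks.

```python
from typing import List

Mask = List[List[bool]]


def erode(mask: Mask, radius: int) -> Mask:
    """Binary erosion with a square window of half-width `radius`.

    Windows that run off the frame count as not covered, so pixels near
    the frame edge are never skipped.
    """
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = all(
                0 <= y + dy < h and 0 <= x + dx < w and mask[y + dy][x + dx]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            )
    return out


def background_holdout(fg_alpha: List[List[float]], blur_margin: int,
                       opaque: float = 0.999) -> Mask:
    """True where the background render can be skipped.

    Only fully opaque foreground counts, and the region is shrunk by
    `blur_margin` pixels so depth-of-field blur has background to sample.
    """
    solid = [[a >= opaque for a in row] for row in fg_alpha]
    return erode(solid, blur_margin)
```

A larger `blur_margin` gives the defocus more background to work with but saves fewer pixels, which is the trade-off the quote points to.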

They also leveraged the wave animation technology from Surf's Up, modified for the opening shots of the ocean, the storm at sea and the cave where Grendel and his mother live. The water inside the cave was created using shaders on a flat plane, with particle simulations in Houdini for the splashes. For the opening shots of the ocean, the vfx artists created 3D water using soft body simulation in Houdini and particle simulation for foam, sea spray, rain and fog, while waves modeled with the Surf's Up system crashed onto the beach.

Meanwhile, the fire pipeline was leveraged from Ghost Rider (Houdini driving Maya's fluid engine), especially for the mayhem created by the dragon. It's a system that lets artists art-direct the look of the fire. The golden dragon, the only fully keyframed CG character, looks almost human when it stands on two legs and reaches up, but is more familiar in other respects, save for its bat-like wings. Artists also used the Sandman fluid engine from Spider-Man 3 to help drive the snow that's disturbed by the dragon's wings.

VFX Lead Vincent Serritella co-developed the fire pipeline. "Jerome and Bob were going more for photorealism here," he says. "We changed the render solution to an in-house volumetric renderer that was written by Magnus Wrenninge. This was beneficial for flying through the effect without having the image break down. We added smoke effects to fire for more natural lighting integration." They additionally created bioluminescence for the cave sequences, which provided great interaction with the water and added greater appeal as a light source. Reflective shimmering and gold lining on the cave walls gave off a nice blue phosphorescent feel.

They also developed a fire library system to accommodate so many static fire sources (torches, sconces, candles and fireplaces) in a variety of situations and environments. "We wanted Jerome to call up a different variation [via a Katana plug-in] without having vfx artists involved," Bialek explains. "The lighter would handle Jerome's requests and render using a proprietary sphere volume renderer."

Chen adds that it was vital to rewrite the backend of the pipeline as well. "Rendering large crowd scenes needed to be started months in advance of the actual shot work so that the layers would be ready for the lighting and compositing artists to work on. So we came up with a specific rating system in the render queue to prioritize shots for the artists. Even though we had several thousand processors, some of these jobs could take 200,000 processor hours to render because of all the layers that had to be made. So while you were waiting a month to render the Mead Hall sequence with nearly 100 characters, you would be working on short shots with a couple of characters. Upgrading the whole company to multi-core processors became its own engineering challenge. They may be blazing fast, but they suck up a lot more power and need a lot of cooling. We exceeded space on the lot, so we had to have little rendering nodes all throughout Culver City."
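A rating-based render queue maps naturally onto a priority queue. In the sketch below, a higher rating (on a hypothetical scale) is dispatched first, with submission order breaking ties, and a helper gives the best-case wall-clock time for a job spread perfectly across dedicated processors. The class, method and shot names are illustrative assumptions, not Imageworks' queue.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class RenderQueue:
    """Hypothetical rating-based queue: higher rating dispatches first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, float]] = []
        self._order = itertools.count()  # FIFO tie-break for equal ratings

    def submit(self, shot: str, rating: int, cpu_hours: float) -> None:
        # heapq is a min-heap, so the rating is negated
        heapq.heappush(self._heap, (-rating, next(self._order), shot, cpu_hours))

    def next_job(self) -> Optional[Tuple[str, float]]:
        if not self._heap:
            return None
        _, _, shot, cpu_hours = heapq.heappop(self._heap)
        return shot, cpu_hours


def best_case_wall_clock_hours(cpu_hours: float, processors: int) -> float:
    """Assumes perfect parallelism across dedicated processors."""
    return cpu_hours / processors
```

Even if a hypothetical 2,000 processors were dedicated to a single 200,000 processor-hour job, it would take about 100 hours of wall-clock time; sharing the farm with every other shot would stretch that further, which is why such jobs had to start well ahead of the shot work.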

Bill Desowitz is editor of VFXWorld.