Steve Sullivan, director of R&D at Industrial Light & Magic, tells Barbara Robertson about the new interactive previs system ILM is developing.

With the prevalence of previs growing exponentially over the past few years, companies are trying to improve the process, giving directors more control over previs creation. Many have looked at Machinima as a possible solution. However, Industrial Light & Magic is taking that idea a bit further.

Barbara Robertson: During his keynote Q&A at last year’s SIGGRAPH, George Lucas said he wanted to have an interactive previs system and hinted strongly that it was under development. Is it?

Steve Sullivan: ILM has been poking at this problem for quite a while, often on shows, and George has always been interested in previsualization. But he never had the interactivity he wanted. Basically, right now most people do previs in Maya. Previs is a huge improvement, but it’s not a good tool for brainstorming. The director usually tells a previs artist, “Here’s what I want. I’ll see you tomorrow, or maybe next week.” In March last year, after Episode III, George said that he really did want a system he could use himself, and that ILM needed to make it. He wasn’t going to buy it from someone else. At that point, we were moving the pipeline onto Zeno.

BR: Didn’t you use a game engine to give Steven Spielberg a previs system on a laptop that he used for AI?

SS: That was an interesting experiment. We also made a system for the Hulk, for Ang Lee. Some of the R&D guys put an interface on top of the XSI realtime viewer. It had limitations, but it was a good step toward the tool we’ve built now: an interesting proof of concept. You could bring in a baked scene and move the camera around, so you could play with shot blocking. We also helped create a Maya-based system for Episode III that improved the interactivity a bit. But, we were hamstrung in terms of how much we could do differently.

BR: What mandate did George Lucas give you?

SS: The mandate was broad. We knew a little about what he wanted from previous previs systems: It had to be simple, simple, simple; simple enough for George to use. He said, “Directors should be able to sit on the couch watching TV while they mock up their shots.” It gave us a certain focus. But the target audience was also 12-year-old kids. George wanted a system that could teach people how to make movies: something that changes how things are done.

BR: Where did you start?

SS: We threw away all of our UI concepts and started from scratch. We couldn’t have the screen cluttered with widgets and check boxes; it had to be about the images. We have context menus, but not toolbars. We also came up with some interesting input devices. You don’t have to use the keyboard or a mouse. You can use game controllers, pan and tilt wheels on the set, multi-degree-of-freedom joysticks. We’re agnostic about input devices. You can sit on the couch and use a game controller to move an image on your TV. Or, you can sit at a desktop and use a keyboard.

BR: So, how far along is the system now?

SS: We have an internal system working now that we’ll shake out and make slick enough to be a product. We have no plans to market it now, but it needs to be consumer-friendly.

We had five people working on it from March until October last year when we had the first demo. Then, we scaled the team down to work on stability. The people all came from ILM, so the system addressed their needs. Now, the team is back up to five to support people who are actively using it to develop the animated [Clone Wars] TV series. And, we’re working on getting the LucasArts game engine to be the default viewer in Zeno, so the previs viewer could use that rather than the one in Zeno. The game engine has higher quality visuals, is optimized in terms of lighting and performance and incorporates realtime physics.

It’s a production-ready system. It runs on standard PCs and on laptops. It’s running under Linux now, but we have Windows machines. We could set someone up with that gear.

BR: Does the system have a name?

SS: We’re calling it Zviz.

BR: How does Zviz work?

SS: It has three modes. In one mode, you can build the set, a second mode is for animation and shooting and the third mode is for editing.

BR: OK. Let’s start with the first mode you mentioned. How does a director build a set?

SS: A director would probably have a world that’s already pre-built, but the capability is there if he’s in the middle of blocking out a shot and decides it would be great to have a doorway or an alley in the background.

We have a generic asset library with proxy props, automobiles, characters and so forth, so someone using the system has something to work with. You can bring in objects from the asset browser, assemble them in the world and scale them. The objects are simple. Some are on animatable cards like sprites. This is the front-end part of the process, the creative process, not the finishing process.

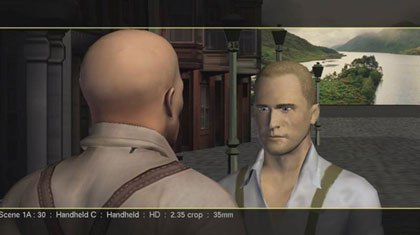

Inspired by games, Zviz could come back around and influence the production of future games like sequels to the LEGO Star Wars II: The Original Trilogy franchise.

Another important aspect is support for sketching and annotation. The system has interactive tools for drawing primitives, so if you’re missing a prop, you can sketch it in 2D. The 2D sketch becomes an animatable asset in 3D that you can use as a proxy. You can also sketch a note on an asset in 2D — say you want something brighter or taller — and it lives in the 3D world. You can draw facial expressions on characters and flip through them.

So, if the director can’t express what he wants with the world built for him or the library of assets, he can make a note or draw a sketch within the system to tell everyone exactly what he wants.

BR: How does the animation and shooting mode work?

SS: This is where you block out a performance, set up the action in the scene and fly the camera around to create shots. You can do different takes and keep multiple versions of a shot in memory at the same time to compare and contrast. Or, you can grab one version and make two more from that.

The system supports various camera rigs and more than one camera. You can have a free-floating camera, or you can use dollies and cranes. It has lenses and film backs — all the things a director thinks of. We have realtime lighting — game engine style lighting. It isn’t a final look, but you can have key lights, shadows, ratios. The system also supports physics, so you can have objects bouncing around and collisions.

One thing we heard from producers and directors is that the previs they get from people now tends to be unrealistic. The images are right, but the previs is not real in the 3D world and they can’t shoot it. They want freedom, but if a camera goes through a wall, they want to be warned.

BR: And the editing mode?

SS: This is where you can pull your shots together and sequence them. It’s pretty straightforward editing — you drag shots into a timeline and have in and out points. It supports audio so you can record into the system or grab pre-recorded audio clips.

BR: Does Zviz support compositing?

SS: We don’t have compositing built in, but because we built the system on Zeno, you could flip a button, jump into Zeno, set up a compositing tree and the system would import it. Sophisticated users could do anything they can do in the Maya-based system, but with the interactive interface designed for directors.

BR: Who has used the system?

SS: Lucas Animation is using the system for the TV series. Visual effects supervisors at ILM are starting to use it as well. It will probably be used at LucasArts for cut scene authoring in games.

BR: It reminds me a little of what people are doing with machinimation. How is Zviz different from what you could do with a realtime game engine?

SS: Well, mocking up movies in this environment is like the best machinima you’ve ever seen. We’re putting a game engine under a moviemaker’s tool to get graphics and speed, but the system goes beyond machinimation.

If you really want to block out a movie, you don’t want machinimation. Machinimation isn’t built for a director who wants to block out shots, edit takes, do timelines and all that.

If you’re familiar with storyboards, you can even work that way. You can bring in 2D storyboards, cut back to 3D stuff and animate with the boards in that kind of workflow if you want.

We focused on the way a director wants to store shots, do edits and keep track of takes. We have asset libraries, sketching and annotation. The interaction with the camera mimics the real world.

It’s nice and direct. You don’t have to learn a lot of stuff. Everything is moveable — you can move things around, move characters around. You can pose characters and animate them. We show it to people and they get it right away. A director can reach into the picture, compose the shot the way he wants and the camera goes to the right place.

BR: So, is George Lucas using Zviz now?

SS: He hasn’t used it yet because he’s not at the previs stage with a film. I know he wants to get his hands on it, though.

BR: When can we see it?

SS: I wish we could show more people now. The time will come.

Barbara Robertson is an award-winning journalist who has covered visual effects and computer animation for 15 years. She also co-founded the dog photography website dogpixandflix.com. Her most recent travel essay appears in the new Travelers Tales anthology, The Thong Also Rises.